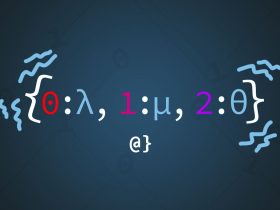

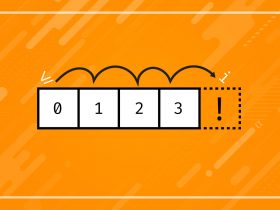

A byte array is a contiguous collection of bytes, each of which is a unit of data most often made up of eight bits. That is, a byte contains a sequence of eight bits, and a byte array contains a sequence of those eight-bit groupings.
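As a small illustration of the bits-within-bytes layout described above, the following Python sketch builds a two-byte sequence and prints each byte as eight bits. The variable names and the byte values are arbitrary choices for the example.

```python
# Two bytes, given as integer values in the range 0-255.
message = bytes([72, 105])

# Render each byte as a zero-padded, eight-character binary string.
bit_patterns = [format(b, "08b") for b in message]

print(len(message))   # 2
print(bit_patterns)   # ['01001000', '01101001']
```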

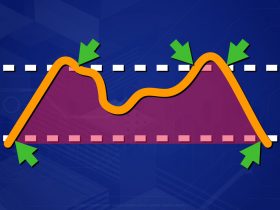

Byte arrays are often used to send data over networks in byte streams. This information format ensures maximal system compatibility and relies on end systems, rather than network infrastructure, to decode data.

Use-Cases

Byte arrays offer the advantage of allowing access to individual bytes within a larger collection. This makes the data easy for developers to inspect, often at some cost in performance and memory overhead. Outside of real-time conditions this is rarely a practical concern, but it should be kept in mind.

For example, inserting new values into a mutable byte array object is somewhat inefficient, because the elements after the insertion point must be shifted. However, looking up values is very efficient, as is deriving attributes such as size, length, or the position of specific elements.
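The lookup and insertion behavior described above can be sketched with Python's built-in `bytearray` type. This is only an illustration; the values are arbitrary, and actual costs depend on the language and runtime.

```python
# A mutable byte array holding five byte values (each in the range 0-255).
data = bytearray([72, 101, 108, 108, 111])

# Reading by position and asking for the length do not depend on array size.
first = data[0]      # 72
size = len(data)     # 5

# Inserting in the middle shifts every later byte one slot to the right.
data.insert(1, 33)

print(first, size)   # 72 5
print(list(data))    # [72, 33, 101, 108, 108, 111]
```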

Important Considerations

Bytes are often assumed to contain 8 bits. This is practical but not guaranteed. Byte streams could contain units of 16 bits, 32 bits, or some other width chosen by developers. Eight-bit sequences are conventional enough to assume but not conventional enough to guarantee.

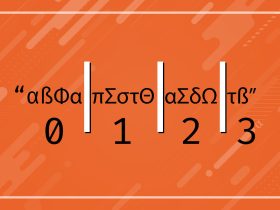

Eight-bit bytes can represent 256 distinct values (0 through 255), which limits the encodings that are available. For example, the ASCII character set uses only the values 0 through 127.

An eight-bit number has a maximum value of 255, so ASCII falls short of using every available value. The ISO 8859-1 (Latin-1) standard assigns characters to values above 127 to make fuller use of the range afforded by the 8-bit byte convention.
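To make the difference between the two character sets concrete, here is a minimal Python sketch that uses the standard `ascii` and `latin-1` codecs. The sample string is an arbitrary choice for the example.

```python
text = "café"

# Latin-1 stores each of these characters in a single byte; "é" becomes 233.
latin1_bytes = text.encode("latin-1")
print(list(latin1_bytes))   # [99, 97, 102, 233]

# ASCII only covers values 0-127, so the same string cannot be encoded.
try:
    text.encode("ascii")
    ascii_ok = True
except UnicodeEncodeError:
    ascii_ok = False

print(ascii_ok)             # False
```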

Representing 256 characters assumes that all values are unsigned. For signed values, a byte can still represent 256 distinct values, but the range shifts from 0 through 255 to -128 through 127, where the most significant bit indicates the sign (two's complement).
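The same four bytes can be read as either unsigned or signed values. The sketch below uses Python's standard `struct` module, where the format code `B` means an unsigned byte and `b` a signed (two's complement) byte. The sample values are chosen to sit at the edges of each range.

```python
import struct

# Four raw bytes: 0x00, 0x7F, 0x80, 0xFF.
raw = bytes([0x00, 0x7F, 0x80, 0xFF])

# Interpret each byte as unsigned (0 through 255).
unsigned = struct.unpack("4B", raw)

# Interpret the same bytes as signed two's complement (-128 through 127).
signed = struct.unpack("4b", raw)

print(unsigned)   # (0, 127, 128, 255)
print(signed)     # (0, 127, -128, -1)
```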

Encoding, decoding, and the use of different character sets are common in programming. These considerations are particularly prevalent in network programming, such as applications built on TCP/IP. Modern programming languages like Python, Java, and C++ all provide tools for developers to specify, convert, and manipulate data so that binary data can be appropriately interpreted. For example, Python has built-in functions for converting data to ASCII, and Java has a powerful sockets library that handles binary data transfer.

When working with data in binary form, one must bring an expectation of what that data represents. For example, receiving a known string value would lead one to interpret a byte stream as unsigned values only. Data received from an unknown source might require some experimentation with encodings.
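One way to experiment with encodings is to try several candidates and compare the results. The following Python sketch does that; the helper name `try_decodings` and the candidate list are hypothetical choices for illustration, not a standard API.

```python
def try_decodings(raw, candidates=("ascii", "utf-8", "latin-1")):
    """Decode raw bytes with each candidate encoding; None marks a failure."""
    results = {}
    for name in candidates:
        try:
            results[name] = raw.decode(name)
        except UnicodeDecodeError:
            results[name] = None
    return results

# Bytes of unknown origin (here, the UTF-8 encoding of "café").
raw = b"caf\xc3\xa9"
results = try_decodings(raw)

for name, decoded in results.items():
    print(name, repr(decoded))
```

Here the ASCII attempt fails, UTF-8 yields the intended text, and Latin-1 succeeds but produces the wrong characters. A decode that raises no error is therefore not proof that the chosen encoding is correct.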