Python is a powerful toolset for data scientists and developers alike. Often, these two disciplines meet at the intersection that is converting data. One common conversion requirement is that of CSV data to JavaScript Object Notation (JSON) data. Fortunately, the Pandas library makes this conversion fast and efficient.

Pandas is a powerful data science library whereby developers and data scientists can access, analyze, and manipulate data efficiently and conveniently. Among the many convenient methods and functions found in the Pandas library is the to_json method. This method, found in the DataFrame class, is a powerful tool for converting data from CSV to JSON.

Project Setup

For this project, we’ll create some sample data of random people with information. We’ll use this handy Python script for generating random personal information, producing the following JSON-format data, saved as a local file named people.json.

[{"id": 1, "first_name": "Alice", "last_name": "Smith", "location": {"City": "Denver", "State": "Colorado"}, "age": 18}, {"id": 2, "first_name": "Bob", "last_name": "Anderson", "location": {"City": "Dallas", "State": "Texas"}, "age": 24}, {"id": 3, "first_name": "Alice", "last_name": "Jacobs", "location": {"City": "Los Angeles", "State": "California"}, "age": 18}, {"id": 4, "first_name": "Bob", "last_name": "Williams", "location": {"City": "Dallas", "State": "Texas"}, "age": 24}, {"id": 5, "first_name": "Pat", "last_name": "Anderson", "location": {"City": "Miami", "State": "Florida"}, "age": 60}]

With this data in hand, we can leverage the power of Pandas to conveniently load our data in CSV format as a DataFrame object, from which we can then convert to CSV as desired.

Step 1: Load JSON into DataFrame

DataFrames are powerful tools for managing 2-dimensional data (or higher-dimensional data reduced as such) with much attention to low-level optimizations. A DataFrame can also quickly and efficiently export data as CSV files — which is what we’re after. We can load the people.json file data as a DataFrame as such:

# Load via context manager and read_json() method

with open('people.json', 'r')as file:

df = pd.read_json(file.read())

# view result

print(df)

id first_name last_name location age

0 1 Alice Smith {'City': 'Denver', 'State': 'Colorado'} 18

1 2 Bob Anderson {'City': 'Dallas', 'State': 'Texas'} 24

2 3 Alice Jacobs {'City': 'Los Angeles', 'State': 'California'} 18

3 4 Bob Williams {'City': 'Dallas', 'State': 'Texas'} 24

4 5 Pat Anderson {'City': 'Miami', 'State': 'Florida'} 60

This gets us pretty close but there are two noticeable issues, one being of grave importance:

- The

locationcolumn contains nested JSON data that didn’t import properly. - The

idcolumn should could be used to index our data (optional).

To approach the first issue, we’ll have to modify the approach by which we loaded our data.

Step 2.a: Deal with Nested JSON Data

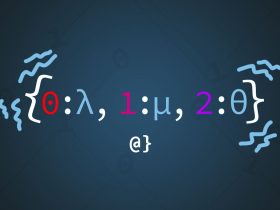

In the first step, we loaded our data directly via the read_json function in the Pandas library. This takes the raw JSON data and loads it directly into a DataFrame. To load nested JSON as a DataFrame we need to take advantage of the json_normalize function. However, this function takes a dict object as an argument. As such, we need to first load the JSON data as a dict as such:

import json

# Load via context manager and read_json() method

with open('people.json', 'r')as file:

# load JSON data and parse into Dictionary object

data = json.load(file)

# Load JSON as DataFrame

df = pd.json_normalize(data)

# Print Result

print(df)

id first_name last_name age location.City location.State

0 1 Alice Smith 18 Denver Colorado

1 2 Bob Anderson 24 Dallas Texas

2 3 Alice Jacobs 18 Los Angeles California

3 4 Bob Williams 24 Dallas Texas

4 5 Pat Anderson 60 Miami Florida

Here we see the keys of the location field automatically being converted into dot-delimited column names.

Step 2.b: Reindex

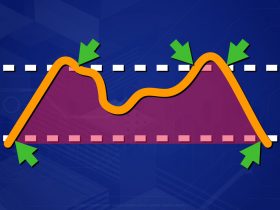

This data will produce an extra column if exported directly to CSV (the index column.) There are several ways in which one could approach handling this, but a convenient means is the setindex method — it will create a visual representation of the change in the DataFrame as well. This is achieved as follows:

# set index

df.set_index('id', inplace=True)

# view result

print(df)

first_name last_name age location.City location.State

id

1 Alice Smith 18 Denver Colorado

2 Bob Anderson 24 Dallas Texas

3 Alice Jacobs 18 Los Angeles California

4 Bob Williams 24 Dallas Texas

5 Pat Anderson 60 Miami Florida

Step 3: Export to CSV File

At this point, our data is ready to be exported to a CSV file. Fortunately, Pandas has methods to both load and save CSV data. We can output our DataFrame to a CSV format file using the to_csv method as follows:

import os

# output DataFrame to CSV file

df.to_csv('people.csv')

# view file metedata

print(os.stat('people.csv'))

os.stat_result(st_mode=33206, st_ino=56294995342297639, st_dev=2997963831, st_nlink=1, st_uid=0, st_gid=0, st_size=231, st_atime=1657209167, st_mtime=1657209167, st_ctime=1657209167)

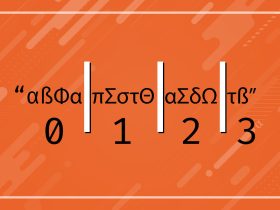

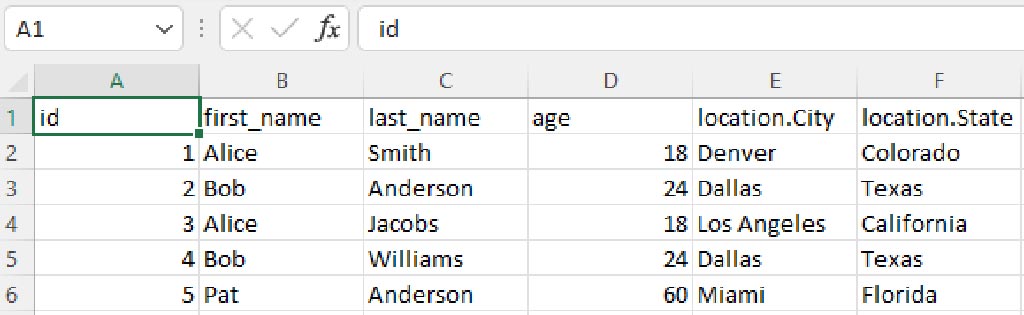

Here we save the data to a local file named people.csv and also confirm some file metadata after the process including created time and filesize. Let’s take a look at the data loaded in Microsoft Excel to see what our conversion process has produced:

Here we see the Microsoft Excel version of our DataFrame represented as expected. This confirms that we have, in fact, converted JSON to CSV data using Pandas’ DataFrame as well as the normalize_json and to_csv methods.

Common Issues

One often encounters issues when working with JSON formatted files in Python. The json library — part of the Python standard library — provides useful tools for addressing such issues. However, sometimes the syntax and logical flow of these tools can be unclear which leads to exceptions of which can cause confusion. Below are some common issues.

json.decoder.JSONDecodeError: Expecting value: line 1 column 1 (char 0)

In most cases, this error results from attempting to use the json.loads() method on the filepath itself, rather than the contents of the file. For example, the following approach is incorrect:

# Trying to load the filepath

df = pd.read_json(json.loads('people.json'))

# Resulting Exception

json.decoder.JSONDecodeError: Expecting value: line 1 column 1 (char 0)

The correct approach would be to first create a file pointer to reference the file object as such:

# use context manager and get reference to file object

with open('people.json', 'r')as file:

# use the loads() method on actual file data

data = json.loads(file.read())

Note that using the read_json method is used in a similar method.

Final Thoughts

Pandas is an incredible tool for data scientists and hobbyists alike. Its powerful low-level optimizations make crunching numbers fast and its well-conceived functions make retain a syntactic simplicity of which one can stand in awe. There are plenty of considerations to make when dealing with data such as replacing missing entries, tabulating JSON data when necessary (similar to the CSV conversion process), and even aggregating time series data. Pandas provides tools for handling all these concerns and more!