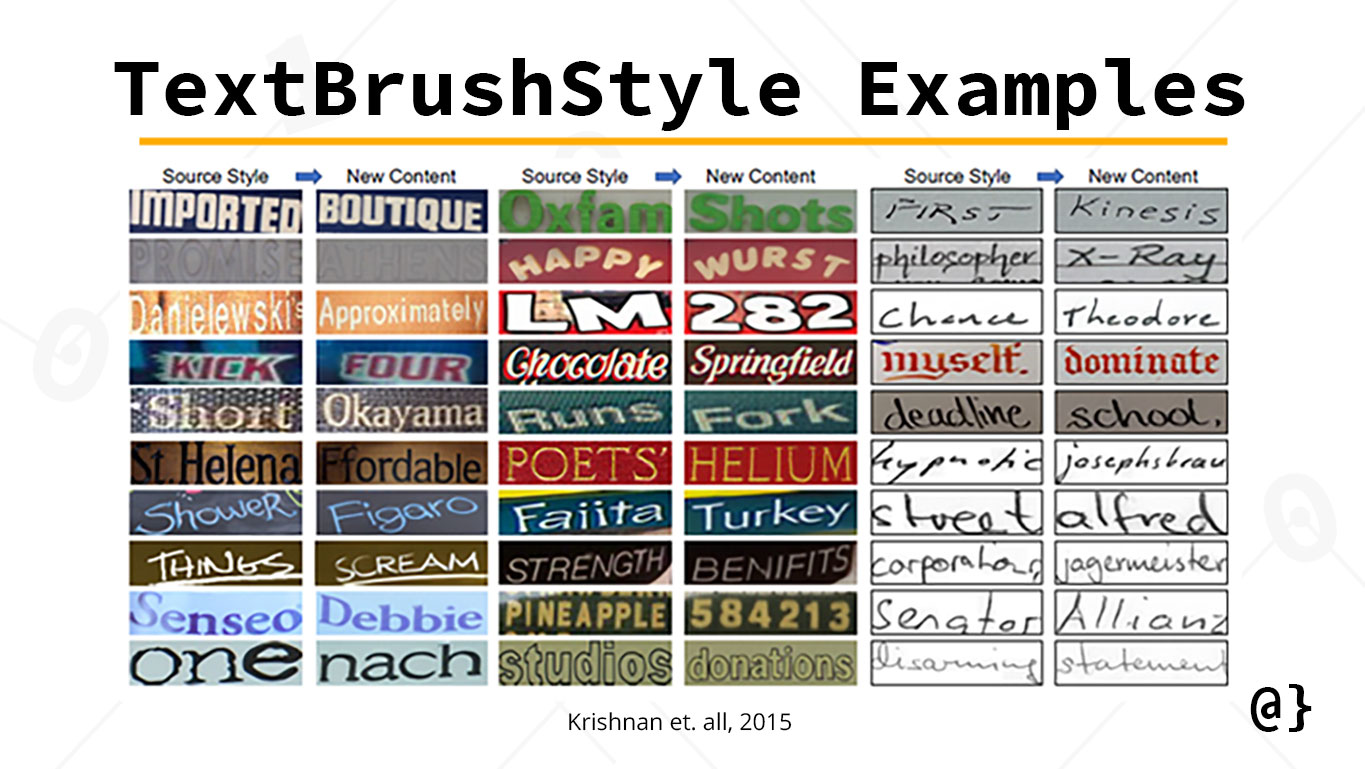

Facebook recently announced its latest AI project, TextStyleBrush, a self-supervised model that can copy the style of text in a photo from a single example. The technology could support new applications in real-time data analysis, deepfake detection, and consumer-level imaging.

The technology behind TextStyleBrush is detailed in a paper recently made public by Facebook's own AI Lab. According to Facebook, the novelty of TextStyleBrush lies in its ability to address a wide range of general-purpose uses. Previous image-recognition software was tightly limited to specific domains, which restricted its adoption in commercial applications.

Praveen Krishnan, the paper's lead author and a postdoctoral researcher at Facebook, had the following to say:

Our disentanglement approach is further trained in a self-supervised manner, allowing the use of real photos for training, without style labels.

Facebook readily admits that the project is still at an early stage of research. It says it is publicizing the technology to bring researchers together to develop ways of combating future misuse, such as the generation of deepfakes.

Applications

Facebook's TextStyleBrush is largely billed as another step toward combating the rise of deepfakes. Deepfakes, a term for artificially generated text, images, and video that depict false realities, are an emerging threat on a global scale. Facebook has previously described its efforts to combat deepfakes that focus on the visual manipulation of human faces in images. In this newest work, it describes TextStyleBrush's role in combating deepfakes as follows:

If AI researchers and practitioners can get ahead of adversaries in building this technology, we can learn to better detect this new style of deepfakes and build robust systems to combat them.

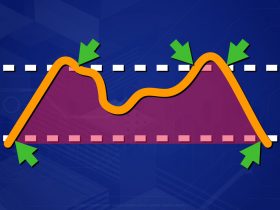

Another possible application of TextStyleBrush is the real-time translation of textual information (both linguistic and data-driven) in augmented reality (AR) environments. AR poses a particular challenge for traditional AI models, which rely on carefully labeled datasets for training.

Source: Facebook AI Labs

TextStyleBrush, by contrast, is a self-supervised model built with much more general applications in mind. It could help translate road-sign names for automated driving technology, translate international signage in real time, and even power imaginative devices such as AR glasses that translate text on the fly. Imagine seeing the text on the pages of a book change to your native language as you turn them!

Among other novel applications, the authors of the paper behind TextStyleBrush describe the following possible use cases:

Our method aims at use cases involving creative self-expression and augmented reality (e.g., photo-realistic translation, leveraging multi-lingual OCR technologies). Our method can be used for data generation and augmentation for training future OCR systems, as successfully done by others, and in other domains. … Our method can also be used to create training data for detecting fake text from images.

Background

Images generated by machine learning algorithms and other artificial intelligence (AI) technologies have advanced greatly in fidelity and complexity in recent years. This progress has had both positive and negative outcomes, from improved medical screening tests to more troublesome "deepfake" data.

Tech giants such as Google, Apple, and Facebook, each with access to massive troves of human-generated data, are among the leaders in developing these technologies. Google-owned DeepMind's success in protein-structure prediction with AlphaFold is one example of the progress such companies are making in AI. TextStyleBrush is only the latest such advance from Facebook's AI labs.

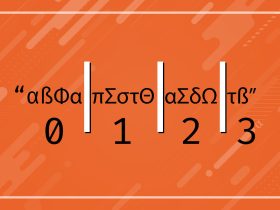

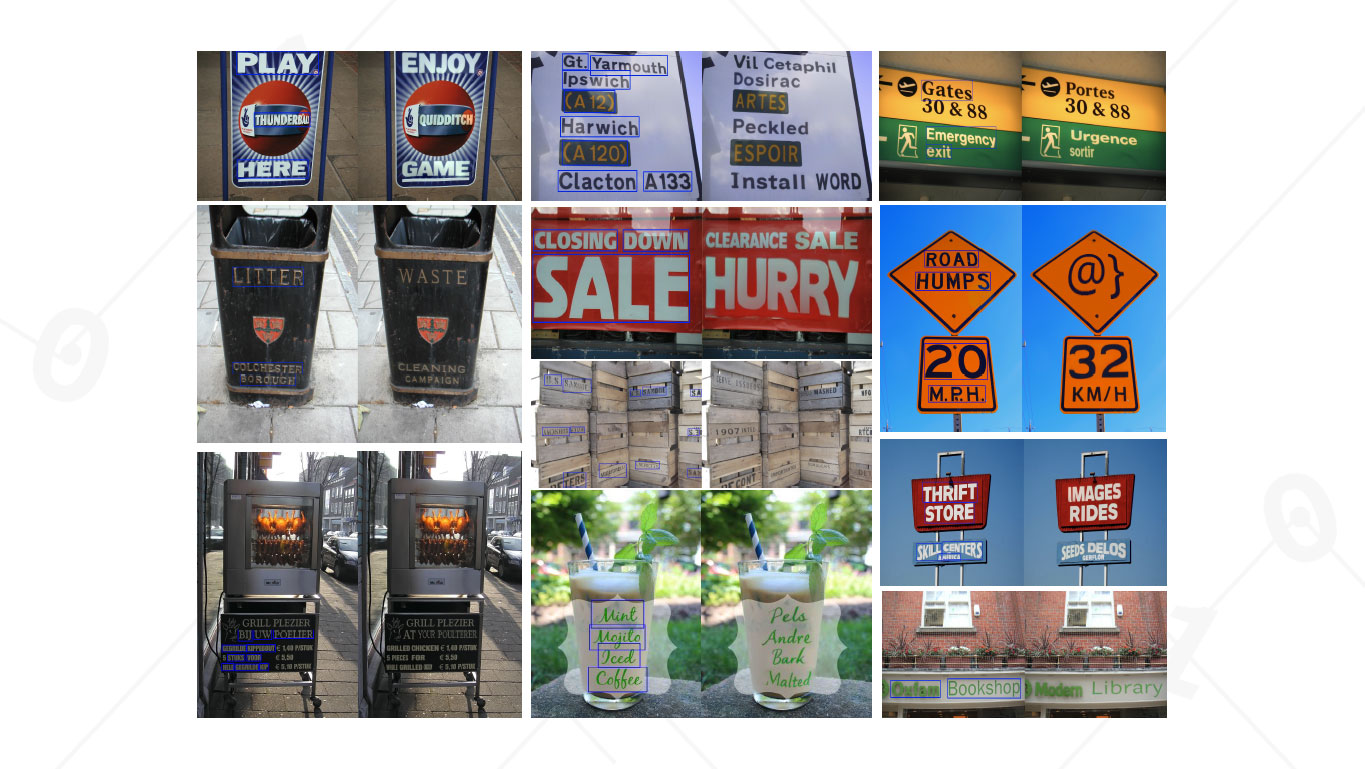

Imaging applications have long been a focal point of AI-based technology. Handwritten character recognition has even become the canonical "learn machine learning" project for beginners and advanced students alike. In the past, AI systems tackled these problems with highly specialized approaches that required explicit data labeling, large training sets, and rigid parameter tuning.

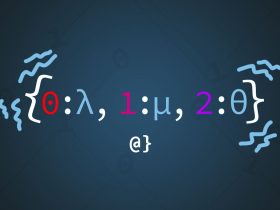

TextStyleBrush moves beyond these approaches, largely out of necessity given the nature of the data, by generating text in a matching style from only a single reference image. In other words, show the model a single image of a word in your own handwriting and it can generate arbitrary new text in an eerily similar visual style. Facebook's announcement describes TextStyleBrush as follows:

It works similar to the way style brush tools work in word processors, but for text aesthetics in images. It surpasses state-of-the-art accuracy in both automated tests and user studies for any type of text. Unlike previous approaches, which define specific parameters such as typeface or target style supervision, we take a more holistic training approach and disentangle the content of a text image from all aspects of its appearance of the entire word box.
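The word-processor comparison works because a word processor already stores what the text says separately from how it looks, so copying formatting is just a matter of swapping one field. TextStyleBrush has to learn that separation from raw pixels. The sketch below illustrates only the word-processor side of the analogy; the `Style`, `TextRun`, and `style_brush` names and fields are hypothetical and do not model how TextStyleBrush works internally.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Style:
    """Appearance attributes stored alongside the text (hypothetical fields)."""
    font: str
    size_pt: float
    color: str


@dataclass(frozen=True)
class TextRun:
    """A span of text: what it says (content) kept separate from how it looks (style)."""
    content: str
    style: Style


def style_brush(source: TextRun, target: TextRun) -> TextRun:
    """Copy the source's appearance onto the target while keeping the target's words."""
    return replace(target, style=source.style)


sample = TextRun("Hello", Style(font="Garamond", size_pt=14.0, color="#8B0000"))
plain = TextRun("World", Style(font="Arial", size_pt=11.0, color="#000000"))
brushed = style_brush(sample, plain)
print(brushed)
# TextRun(content='World', style=Style(font='Garamond', size_pt=14.0, color='#8B0000'))
```

In an image, no such tidy `style` field exists: font, color, texture, lighting, and background are all mixed into the same pixels, which is why disentangling them from a single word box is the hard part.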

TextStyleBrush marks yet another advance in AI characterized by a significant reduction in required training data. Another such advance, publicly detailed in December 2020, came from popular GPU manufacturer NVIDIA: a training technique for generative adversarial networks (GANs) called adaptive discriminator augmentation (ADA). Using ADA, researchers were able to cut the number of training images required by as much as a factor of 20.
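The core idea of ADA, as described in NVIDIA's paper on the technique, is to augment every image the discriminator sees with some probability `p`, and to adapt `p` during training using a simple overfitting signal: the average sign of the discriminator's outputs on real training images. When that signal rises above a target (the paper uses 0.6), the discriminator is becoming overconfident, so `p` is nudged up; otherwise it is nudged down. The sketch below shows only that control loop. The function names, the batch size, the adjustment interval, and the ramp length are assumptions for illustration, and it neither applies augmentations nor trains a GAN; it is not NVIDIA's implementation.

```python
def sign(x: float) -> int:
    """Return +1, 0, or -1 depending on the sign of x."""
    return (x > 0) - (x < 0)


def overfitting_heuristic(real_logits: list[float]) -> float:
    """r_t = E[sign(D(x_real))]: near 0 when the discriminator is unsure about real
    training images, near 1 when it confidently calls all of them real (overfitting)."""
    return sum(sign(v) for v in real_logits) / len(real_logits)


def update_augment_p(
    p: float,
    real_logits: list[float],
    target: float = 0.6,         # target value for r_t
    batch_size: int = 64,        # assumed minibatch size
    interval: int = 4,           # assumed: p is adjusted once every 4 minibatches
    ramp_images: int = 500_000,  # assumed: images needed for p to climb from 0 to 1
) -> float:
    """Raise p when r_t exceeds the target, lower it otherwise, keeping p in [0, 1]."""
    r_t = overfitting_heuristic(real_logits)
    step = batch_size * interval / ramp_images
    p += sign(r_t - target) * step
    return min(max(p, 0.0), 1.0)


p = 0.0
p = update_augment_p(p, [2.1, 0.7, 1.3, 3.0])    # every output positive: r_t = 1.0, p rises
p = update_augment_p(p, [0.4, -0.8, 1.1, -0.2])  # r_t = 0.0, p falls back toward 0
```

Because `p` moves in small fixed steps rather than being set directly, augmentation strength tracks overfitting gradually over the course of training.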

AI Research

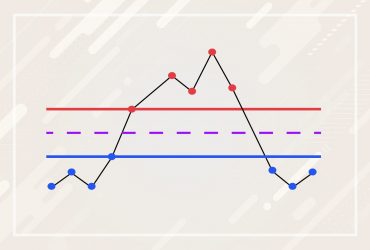

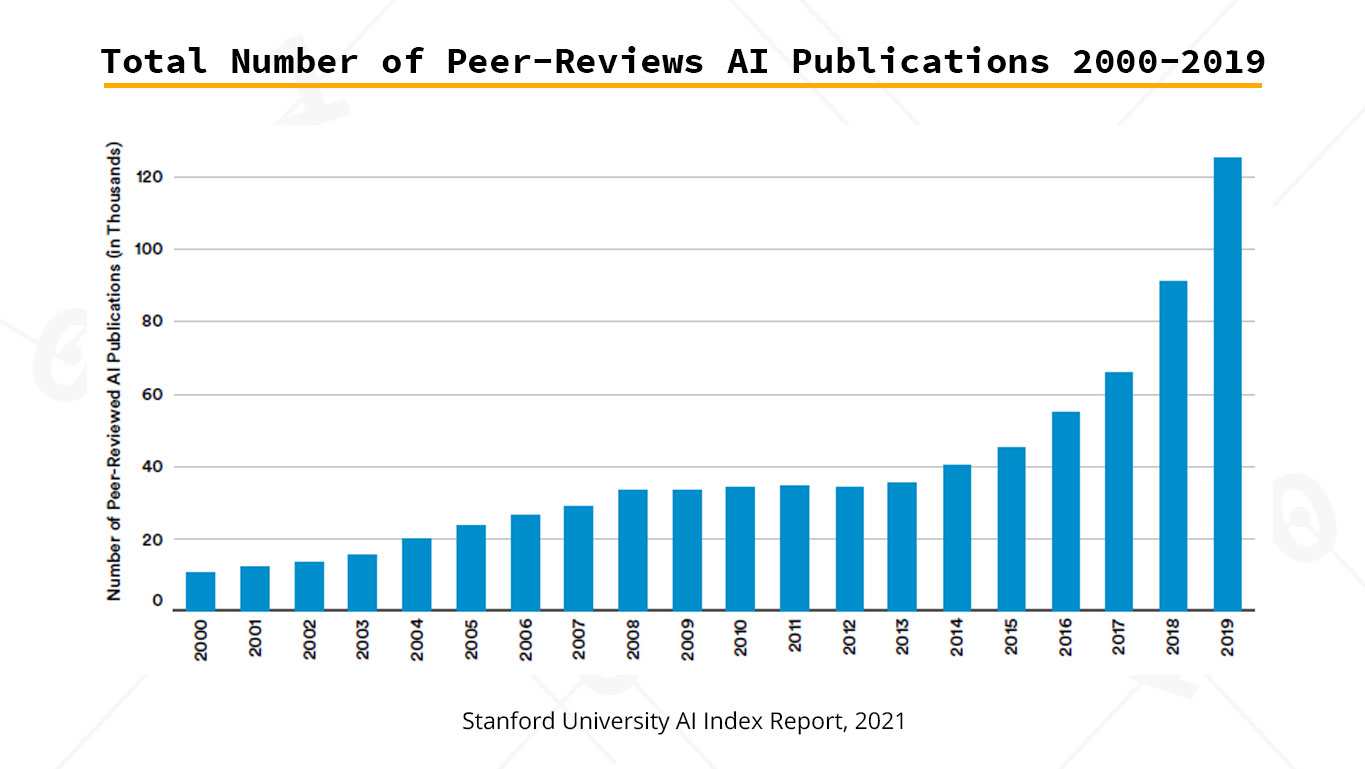

Artificial intelligence is a rapidly expanding field of academic study. While breakthroughs in academia do not always translate quickly into commerce, the volume of research can serve as a rough proxy for future applications. That is, more research is likely to translate into more consumer applications. Stanford University's 2021 AI Index Report noted a 1200% increase in AI publications in peer-reviewed journals from 2000 to 2019, as the charts in this section illustrate.

East Asia and the Pacific lead the world in AI publications, with Europe and Central Asia, and North America, close behind. Africa and South Asia have seen the highest relative growth in AI research over the past 10 years, with publications increasing by nearly 700%.
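Percentage changes like the ones quoted above are easy to misread as multipliers. An increase of p percent means the new value is 1 + p/100 times the old one, so a 1200% increase is a 13-fold rise, not a 12-fold one. Going the other way, needing k times fewer items is a reduction of (1 - 1/k) × 100 percent, which can never reach 100%. The helper names below are illustrative.

```python
def growth_factor(percent_increase: float) -> float:
    """An increase of p percent means the new value is (1 + p/100) times the old one."""
    return 1 + percent_increase / 100


def percent_reduction(times_fewer: float) -> float:
    """Needing k times fewer items removes (1 - 1/k) of them, always less than 100%."""
    return (1 - 1 / times_fewer) * 100


print(growth_factor(1200))              # 13.0
print(growth_factor(700))               # 8.0
print(round(percent_reduction(20), 1))  # 95.0
```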

At this point, it's anyone's guess how the willingness of corporations like Facebook to publish their internal research will affect the pace of academic research. It may be that postdocs decide they want bigger paychecks and more flexible working hours!

Future Study

The authors of the paper behind TextStyleBrush close by remarking on some known shortcomings. These include failures on complex styles with damaged text (such as holes in signs), on highly unusual styles (for which few related samples likely exist), and on source images with inconsistent color grading.

The authors also note particular difficulty in reproducing text when the source sample is written on a metallic surface. Given the black-box nature of many learning models, it is hard to say why these particular contexts give the model trouble.

References

- Krishnan, Praveen, et al. “TextStyleBrush: Transfer of Text Aesthetics from a Single Example.” Journal of Latex Class Files, vol. 14, no. 8, 2015, arxiv.org/abs/2106.08385v1.

Note: The Journal of Latex Class Files is not an actual journal but placeholder header text generated by IEEE's author templates for LaTeX documents. It is cited here both for humor, since the Facebook paper retained this header, and for education.