Computer number formats are at the core of computer science. While not as heavy in theory as some other concepts, the way numbers are represented in computers has far-reaching practical consequences in computing.

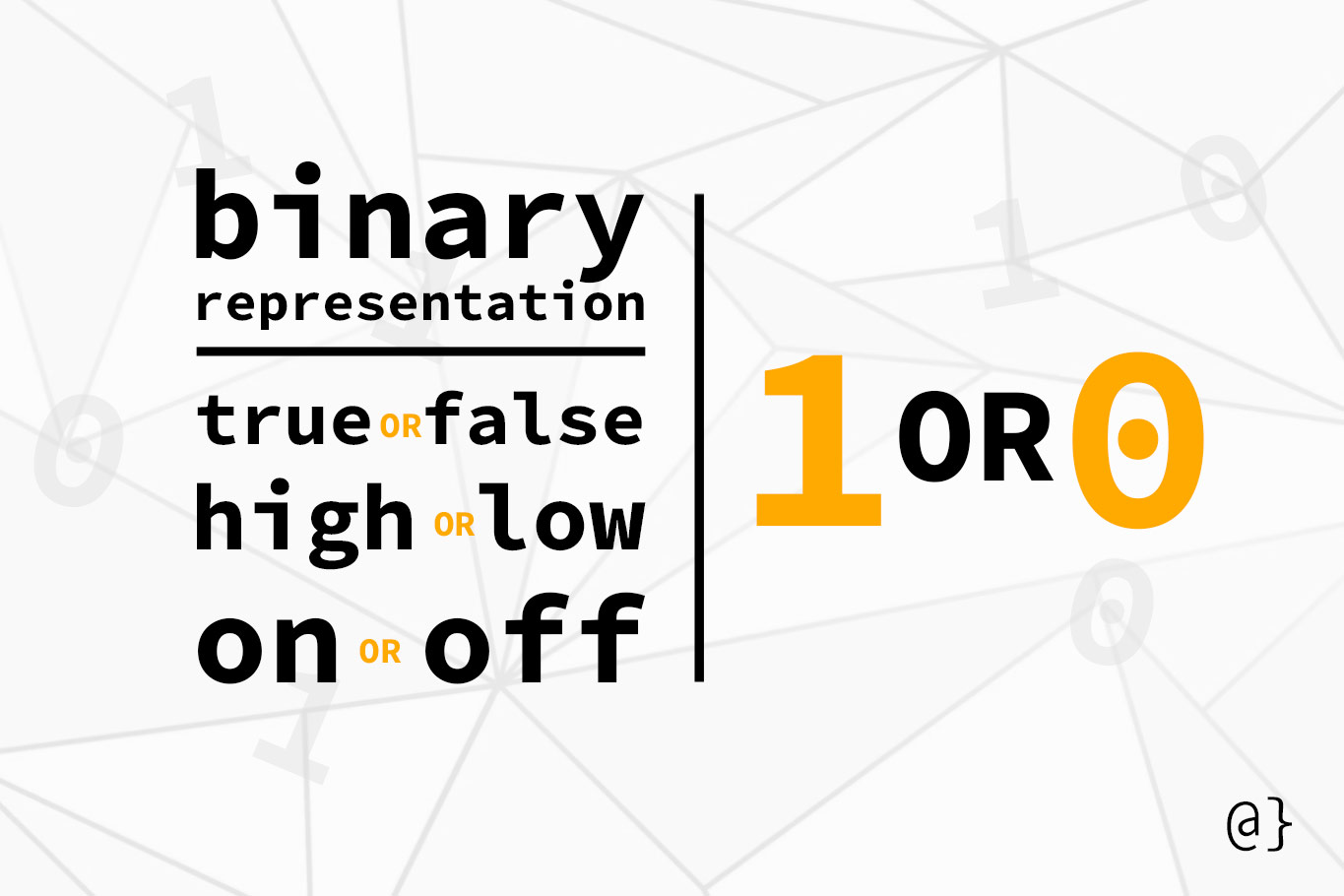

Binary is often easily recognized, even by non-computer scientists, as it forms the basis of most modern computing numerical systems. However, numerical representation is a bit more complicated than just converting things to 1's and 0's. There are octal, hexadecimal, floating-point numbers, doubles, longs, shorts; the list goes on. At the heart of it all, however, are ones and zeroes.

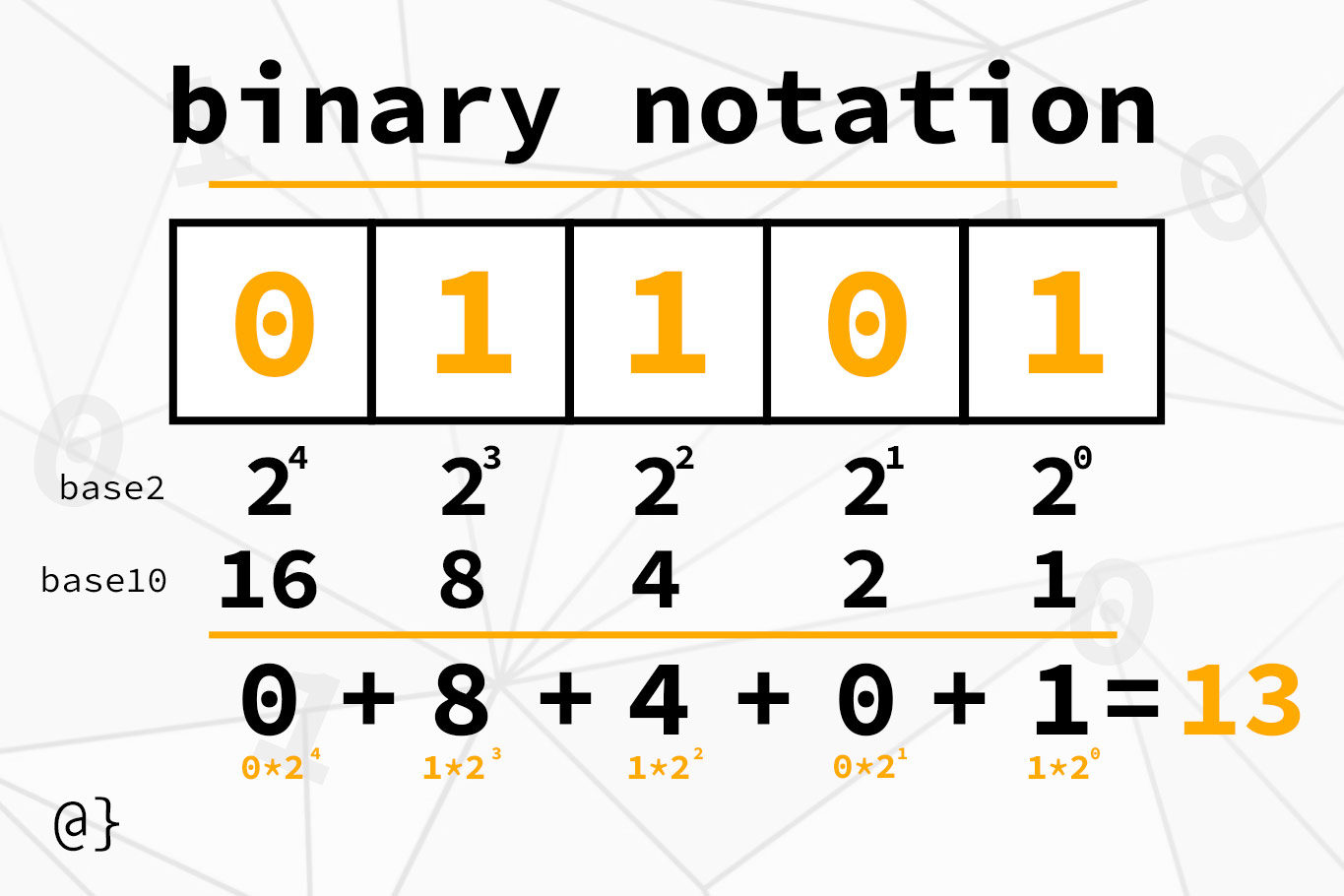

Binary Notation

Binary notation is a system by which numbers are represented using only 1’s and 0’s. This is also referred to as Base2 notation, as opposed to the Base10 notation that everyone is familiar with. Conceptually, each digit position in binary stands for a power of two, whereas each digit position in Base10 stands for a power of 10. It’s a simple concept but can give rise to some complicated situations.
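As a small illustration of counting in powers of two, the sketch below converts a non-negative integer to its binary digits by repeated division by 2, and back again by summing powers of two. The function names `to_binary` and `from_binary` are made up for this example; Python's built-in `bin()` and `int(s, 2)` cover the same ground.

```python
def to_binary(n: int) -> str:
    """Return the binary digits of a non-negative integer as a string."""
    if n < 0:
        raise ValueError("this sketch only handles non-negative integers")
    if n == 0:
        return "0"
    digits = []
    while n > 0:
        digits.append(str(n % 2))  # the remainder is the next lowest binary digit
        n //= 2                    # move on to the next power of two
    return "".join(reversed(digits))


def from_binary(bits: str) -> int:
    """Sum the powers of two selected by each '1' digit."""
    total = 0
    for position, digit in enumerate(reversed(bits)):
        if digit == "1":
            total += 2 ** position
    return total


print(to_binary(13))        # 1101  (8 + 4 + 0 + 1)
print(from_binary("1101"))  # 13
```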

Signed vs. Unsigned

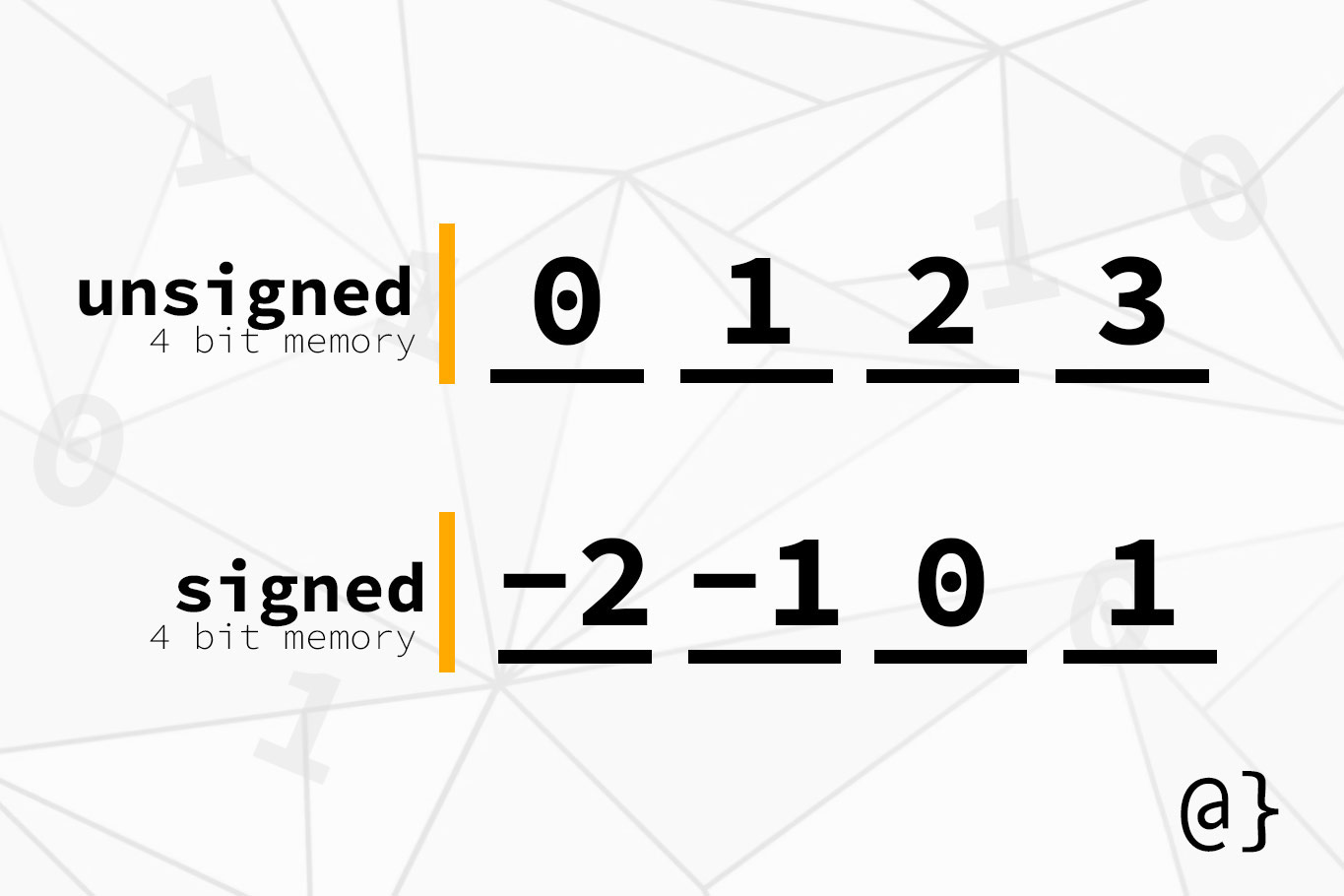

Computer scientists represent numbers in many ways. Two basic variations are signed and unsigned notations. These are simple accommodations to allow higher maximum values when only positive numbers are required for calculations. Consider the following:

Signed Notation is the formal terminology that means both positive and negative. The word “signed” simply refers to the plus or minus sign commonly used to denote positive and negative values.

Unsigned Notation is the formal terminology that means only non-negative values: zero and the positive numbers. This means of representation is used to accommodate higher maximum values when it’s known that negative values will not need to be represented.

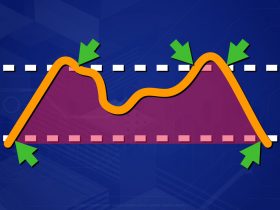

When a numeric representation system needs to support both positive and negative values, the maximum value possible within the system is effectively halved. In most cases, if the system has N distinct values available, the maximum value becomes N / 2 - 1. With 8 bits, for example, there are 256 distinct values, so the largest signed value is 127 rather than the unsigned maximum of 255.

In many cases, the maximum value equals the absolute value of the minimum value, minus one. The asymmetry arises because 0 must be accommodated: it takes up one of the available slots, so the positive and negative sides cannot both get the same number of values.
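To make the trade-off concrete, the sketch below reads the same 8-bit patterns once as unsigned and once as signed. It assumes two's complement, the signed encoding used by most current hardware, which this post does not name explicitly. The helper name `interpret_byte` is hypothetical.

```python
def interpret_byte(pattern: str) -> tuple[int, int]:
    """Interpret an 8-character bit string as (unsigned, signed) integers.

    The signed reading assumes two's complement (an assumption of this sketch).
    """
    if len(pattern) != 8 or set(pattern) - {"0", "1"}:
        raise ValueError("expected exactly 8 binary digits")
    raw = int(pattern, 2).to_bytes(1, byteorder="big")
    unsigned = int.from_bytes(raw, byteorder="big", signed=False)
    signed = int.from_bytes(raw, byteorder="big", signed=True)
    return unsigned, signed


for pattern in ("00000000", "01111111", "10000000", "11111111"):
    print(pattern, interpret_byte(pattern))
```

The patterns that start with a 1 are the ones whose meaning changes: `10000000` reads as 128 unsigned but as -128 signed, which is where the extra negative value comes from.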

Range of Numbers

The range of numbers a computer can represent is directly related to the number of bits used to store each value. For example, a 32-bit architecture provides 32 bits with which numbers can be represented (4 bytes) and a 64-bit architecture provides 64 (8 bytes). Simple enough, right?

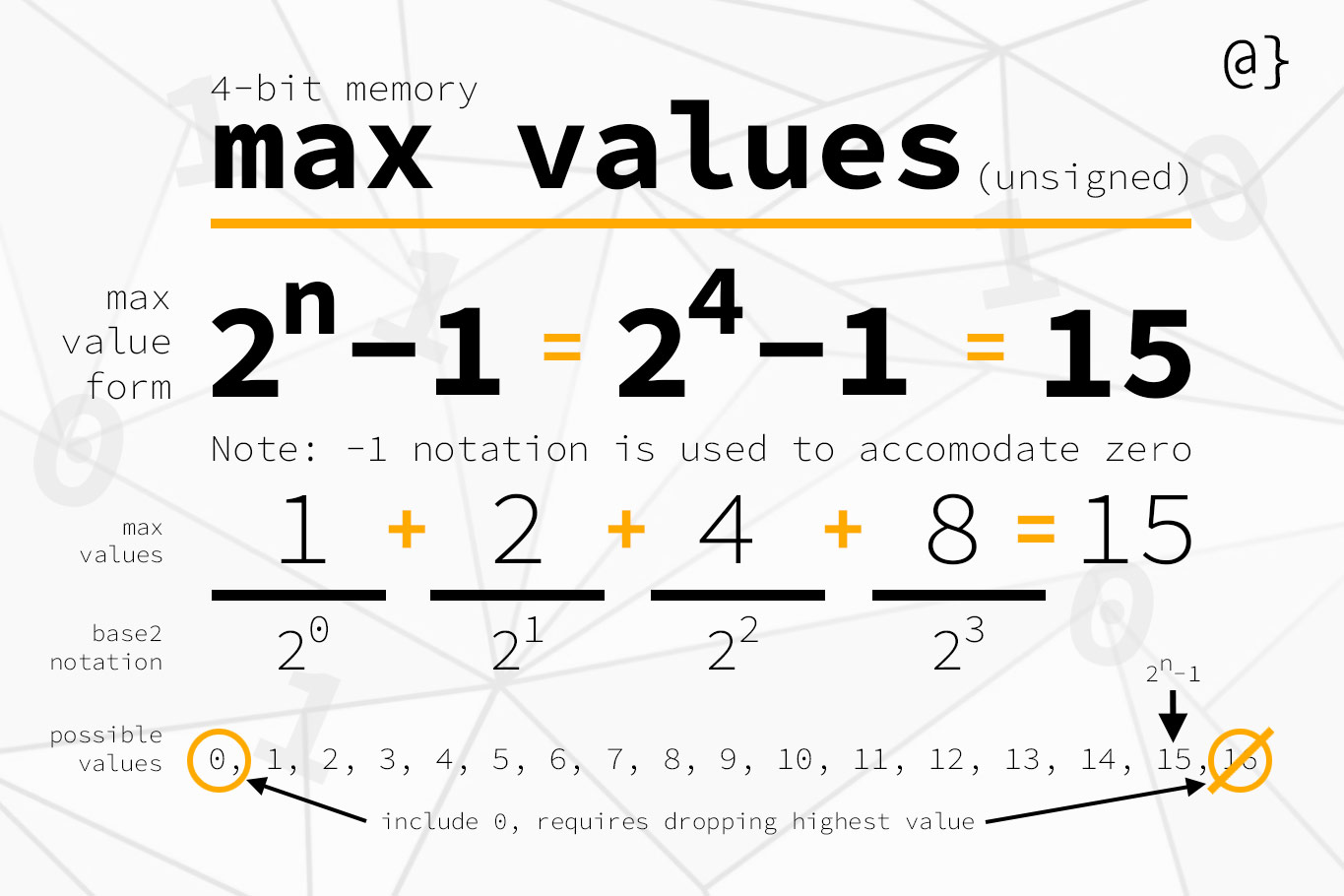

Given what we now know about binary representation, we can calculate how many distinct values a 32-bit system can represent in binary: $2^{32}$, which is 4,294,967,296. However, this isn’t the largest number yet, because one of those values has to represent 0.

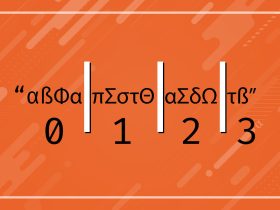

To make room for zero, computer scientists simply subtract 1 from that count to get the max value. This results in a range of 0 to 4,294,967,295, where the max value is 32 ones in binary. An abbreviated example of this can be seen with a 4-bit memory architecture, where the 16 possible bit patterns cover 0 to 15, in the following illustration:

This is the typical range for a 32-bit unsigned integer in languages like C++. The term unsigned means that no value is negative. Signed values are represented slightly differently.
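The arithmetic above can be sketched in a few lines. The helper `unsigned_range` is a hypothetical name for this example. It simply evaluates 0 to 2^bits - 1 and is not tied to any particular language's integer types.

```python
def unsigned_range(bits: int) -> tuple[int, int]:
    """Return (min, max) for an unsigned integer of the given bit width."""
    if bits < 1:
        raise ValueError("bit width must be at least 1")
    return 0, 2 ** bits - 1


# All 16 patterns of a 4-bit value, counting from 0 to 15.
for value in range(2 ** 4):
    print(format(value, "04b"), value)

print(unsigned_range(4))   # (0, 15)
print(unsigned_range(32))  # (0, 4294967295)
```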

For signed values, meaning numbers that carry a positive or negative sign, the count of representable numbers stays the same, but it’s split between positive and negative values. The range $0$ to $2^{32} - 1$ becomes $-2^{31}$ to $2^{31} - 1$, or -2,147,483,648 to 2,147,483,647. Again, note the reduction of the max value by 1 to accommodate 0.

The general form for calculating the range of a signed number, given the number of available bits $M$, is as follows: $-2^{M-1}$ to $2^{M-1} - 1$.

Where 32 bits is the max number of bits available, the exponent becomes 31. Likewise, in modern systems where 64 bits are available, the exponent becomes 63, and the range of possible signed values becomes $-2^{63}$ to $2^{63} - 1$, or -9,223,372,036,854,775,808 to 9,223,372,036,854,775,807.
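As a quick check of the general form, the sketch below evaluates -2^(M-1) and 2^(M-1) - 1 for a given bit count M. The function name `signed_range` is invented for this example, and Python is used only because its integers do not overflow, so the 64-bit values can be computed directly.

```python
def signed_range(bits: int) -> tuple[int, int]:
    """Return (min, max) for a signed integer of the given bit width M."""
    if bits < 1:
        raise ValueError("bit width must be at least 1")
    half = 2 ** (bits - 1)   # half of the 2**bits available values
    return -half, half - 1   # the non-negative side gives up one slot to 0


for m in (8, 32, 64):
    low, high = signed_range(m)
    # The total count of values is unchanged: high - low + 1 == 2**m.
    print(m, low, high, high - low + 1 == 2 ** m)
```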

Work in Progress

This post is a work in progress and currently represents an incomplete discussion. Future points of focus include the following areas of numerical representation:

- Hexadecimal

- Octal

- Converting Between Bases

- Floating Point Numbers

Discussion

Numbers are all around us but don’t exist tangibly. We are forced to represent them in abstract ways ranging in complexity from fingers and toes to quantum spin. The dynamics of how numbers interact, and the implications therein, are essential to any strong understanding of computer science.

The methods of numerical representation discussed here should not be considered comprehensive. Rather, Binary, Octal, Hexadecimal, and Floating-Point representations are merely foundational approaches to representing numbers in computers. Each exists because it offers merit in particular situations, and understanding when and where to use them can help better tackle some of the most lingering problems in the field of computer science.