Propagation delay is the time it takes for a single bit to travel from the sender to the receiver across a network link. The bit travels at the propagation speed of the link, which depends on the physical medium it passes through.
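To make the dependence on the medium concrete, the short Python sketch below computes the delay with the standard relation delay = distance / propagation speed. The function name `propagation_delay`, the 1000 km distance, and the per-medium speeds are illustrative assumptions, not values taken from this article. Real speeds depend on the specific cable or channel.

```python
def propagation_delay(distance_m: float, speed_m_per_s: float) -> float:
    """Return the propagation delay in seconds: distance divided by propagation speed."""
    if speed_m_per_s <= 0:
        raise ValueError("propagation speed must be positive")
    return distance_m / speed_m_per_s


# Assumed, illustrative propagation speeds in metres per second.
ASSUMED_SPEEDS = {
    "fiber optic (roughly 2/3 of the speed of light)": 2.0e8,
    "radio through air (roughly the speed of light)": 3.0e8,
}

if __name__ == "__main__":
    distance = 1_000_000  # hypothetical 1000 km link between two routers
    for medium, speed in ASSUMED_SPEEDS.items():
        delay_ms = propagation_delay(distance, speed) * 1000  # seconds -> milliseconds
        print(f"{medium}: {delay_ms:.2f} ms")
```

With these assumed speeds, the same 1000 km distance gives about 5.00 ms over fiber and about 3.33 ms over radio. The distance stays fixed, so only the medium changes the result.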

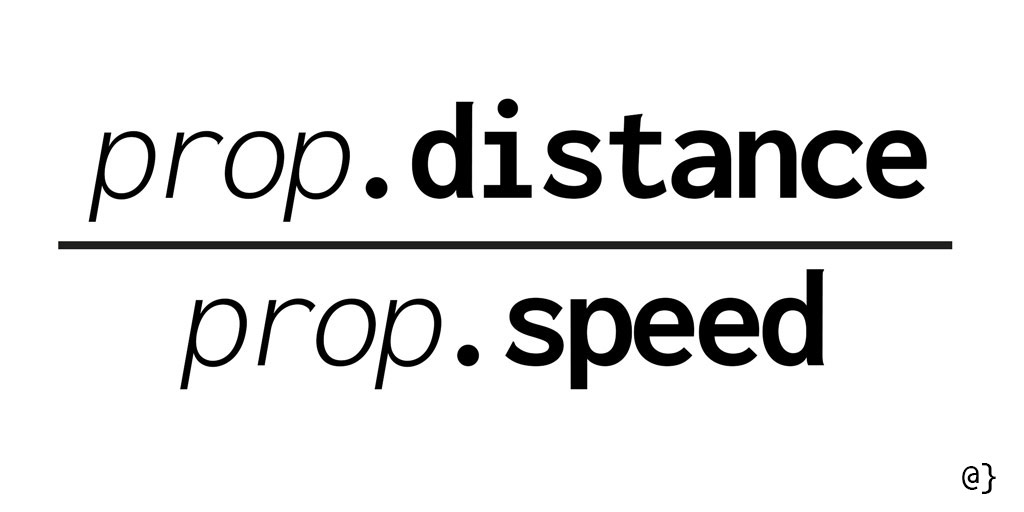

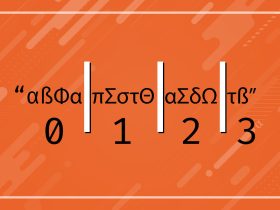

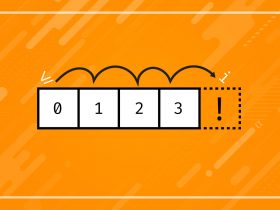

For example, a signal travels at a different speed through a fiber optic line than through a copper line, although in common media the propagation speed falls roughly between 2 × 10^8 and 3 × 10^8 m/s. The propagation delay is calculated by dividing the distance between two routers by the propagation speed of the link, as illustrated below: