TCP Flow Control uses a system of buffers and variables to determine how quickly data can be transmitted. The underlying concept is that a receiving end system can notify the sending end system when the receiving application is unable to keep up with the transmission rate.

Flow Control is not the same as TCP’s congestion control system, which detects and responds to problems in the network. Rather, TCP’s flow control system is designed to handle end-system issues: specifically, a receiver that cannot process data as fast as it arrives.

All About Buffers

Transmission Control Protocol (TCP) involves a series of buffers for both senders and receivers. These buffers serve as intermediaries between the transport layer and the application layer. TCP’s flow control mechanism leverages the dynamics of these buffers to provide a speed-matching service that prevents receive-buffer overflow.

When an application sends data via TCP, the data is placed in a send buffer. When an application receives data, the incoming bytes are placed in a receive buffer until the application reads them.

When a sender transmits data faster than the receiving application can consume it from its receive buffer, the buffer overflows (not to be confused with integer overflow). TCP flow control is designed to prevent this by limiting the sender’s transmission rate to what the receiver can absorb.
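To make the overflow scenario concrete, here is a toy Python model with no flow control at all. It is a sketch under simplifying assumptions, not real TCP behavior: time advances in discrete ticks, the sender pushes a fixed number of bytes per tick no matter how full the buffer is, and the receiving application reads a fixed number of bytes per tick. The function name `simulate_without_flow_control` and its parameters are hypothetical, chosen for illustration.

```python
def simulate_without_flow_control(buffer_size, send_rate, read_rate, ticks):
    """Toy model of a receiver with NO flow control.

    Each tick the sender pushes send_rate bytes regardless of free space,
    then the application reads up to read_rate bytes from the buffer.
    Returns (total bytes dropped, bytes still buffered at the end).
    """
    buffered = 0
    dropped = 0
    for _ in range(ticks):
        free = buffer_size - buffered
        accepted = min(send_rate, free)      # only what fits is stored
        dropped += send_rate - accepted      # the rest overflows and is lost
        buffered += accepted
        buffered -= min(read_rate, buffered) # application drains the buffer
    return dropped, buffered


print(simulate_without_flow_control(100, 30, 10, 10))  # (110, 90)
```

With a 100-byte buffer, a sender pushing 30 bytes per tick, and an application reading 10 bytes per tick, the buffer fills by the fifth tick and every tick after that discards data.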

Buffer Feedback

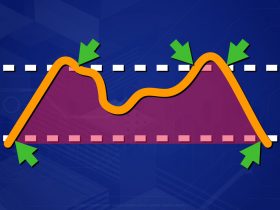

On the receiving side, data placed into the buffer has already been deemed valid and in sequence by TCP’s reliable data transfer (RDT) service. The receive buffer may fill faster than the application can read from it, so without a control mechanism a sender could quickly overflow the receiver’s buffer.

TCP’s flow control service helps prevent this from happening. Conceptually, TCP flow control is a speed-matching service: it helps a sender limit its transmission rate to match the rate at which the receiving application drains its receive buffer.
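The speed-matching idea can be sketched with the same kind of toy model, now letting the sender see the receiver’s free space. Assumptions, all for illustration: discrete ticks, window updates that reach the sender instantly (real TCP has a round-trip delay), and a hypothetical function `simulate_with_flow_control`.

```python
def simulate_with_flow_control(buffer_size, send_rate, read_rate, ticks):
    """Toy model: the sender never sends more than the last advertised window.

    Returns (bytes sent in each tick, total bytes dropped at the receiver).
    """
    buffered = 0
    rwnd = buffer_size                       # receiver's advertised free space
    sends = []
    dropped = 0
    for _ in range(ticks):
        sent = min(send_rate, rwnd)          # sender respects the advertised window
        sends.append(sent)
        free = buffer_size - buffered
        accepted = min(sent, free)
        dropped += sent - accepted           # stays 0 because sent <= free
        buffered += accepted
        buffered -= min(read_rate, buffered) # application drains the buffer
        rwnd = buffer_size - buffered        # receiver advertises its new free space
    return sends, dropped


print(simulate_with_flow_control(100, 30, 10, 10))
# ([30, 30, 30, 30, 20, 10, 10, 10, 10, 10], 0)
```

Once the buffer fills, the sender is throttled down to 10 bytes per tick, exactly the application’s read rate, and nothing is dropped.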

TCP’s send rate can also be throttled by congestion control, but that mechanism is separate from flow control and reflects network conditions rather than the receiving end system’s capacity.

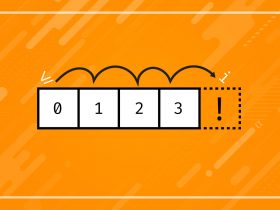

Sliding Windows

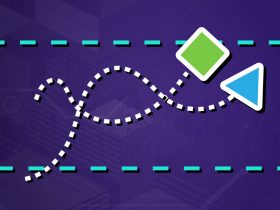

TCP uses what’s known as a sliding window protocol to limit how much data is in transit at once. The window caps the amount of unacknowledged data the sender may have outstanding; in other words, it tells the sender when to stop sending and wait for confirmation that earlier data was received.

TCP does this by establishing a “window” in which a sequence of packets is “authorized” to be sent. When the sender receives an acknowledgment (ACK) from the receiver, the window slides forward to authorize new packets for sending.
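The sender-side bookkeeping can be sketched in Python. This is a simplified model, not TCP’s actual implementation: it numbers whole packets rather than bytes, assumes cumulative ACKs (an ACK for n confirms every packet before n), and `SlidingWindowSender` is a hypothetical class name.

```python
class SlidingWindowSender:
    """Toy sender-side sliding window over numbered packets."""

    def __init__(self, window_size):
        self.window_size = window_size
        self.base = 0      # oldest unacknowledged packet
        self.next_seq = 0  # next packet number to send

    def can_send(self):
        # A packet is authorized only if it falls inside [base, base + window_size).
        return self.next_seq < self.base + self.window_size

    def send(self):
        if not self.can_send():
            raise RuntimeError("window full: wait for an ACK")
        seq = self.next_seq
        self.next_seq += 1
        return seq

    def on_ack(self, ack_num):
        # Cumulative ACK: everything below ack_num has arrived, so slide the window.
        if self.base < ack_num <= self.next_seq:
            self.base = ack_num


sender = SlidingWindowSender(window_size=4)
print([sender.send() for _ in range(4)])  # [0, 1, 2, 3]
print(sender.can_send())                  # False: window exhausted
sender.on_ack(2)                          # packets 0 and 1 confirmed
print([sender.send(), sender.send()])     # [4, 5]
```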

Sliding windows underpin several retransmission strategies, including Go-Back-N and Selective Repeat, but the window’s fundamental function remains the same: determining which packets may be sent. Let’s take a closer look at the receive window.
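The practical difference between Go-Back-N and Selective Repeat shows up after a loss. The toy functions below (hypothetical names) ignore timers and ACK handling entirely and only report which outstanding packets each strategy would retransmit when some of them are lost.

```python
def go_back_n_retransmit(outstanding, lost):
    """Go-Back-N: resend the first lost packet and everything sent after it."""
    for i, seq in enumerate(outstanding):
        if seq in lost:
            return outstanding[i:]
    return []


def selective_repeat_retransmit(outstanding, lost):
    """Selective Repeat: resend only the packets that were actually lost."""
    return [seq for seq in outstanding if seq in lost]


in_flight = [4, 5, 6, 7]
print(go_back_n_retransmit(in_flight, {5}))         # [5, 6, 7]
print(selective_repeat_retransmit(in_flight, {5}))  # [5]
```

Go-Back-N keeps the receiver simple at the cost of resending packets that may have arrived fine; Selective Repeat resends less but requires the receiver to buffer out-of-order packets.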

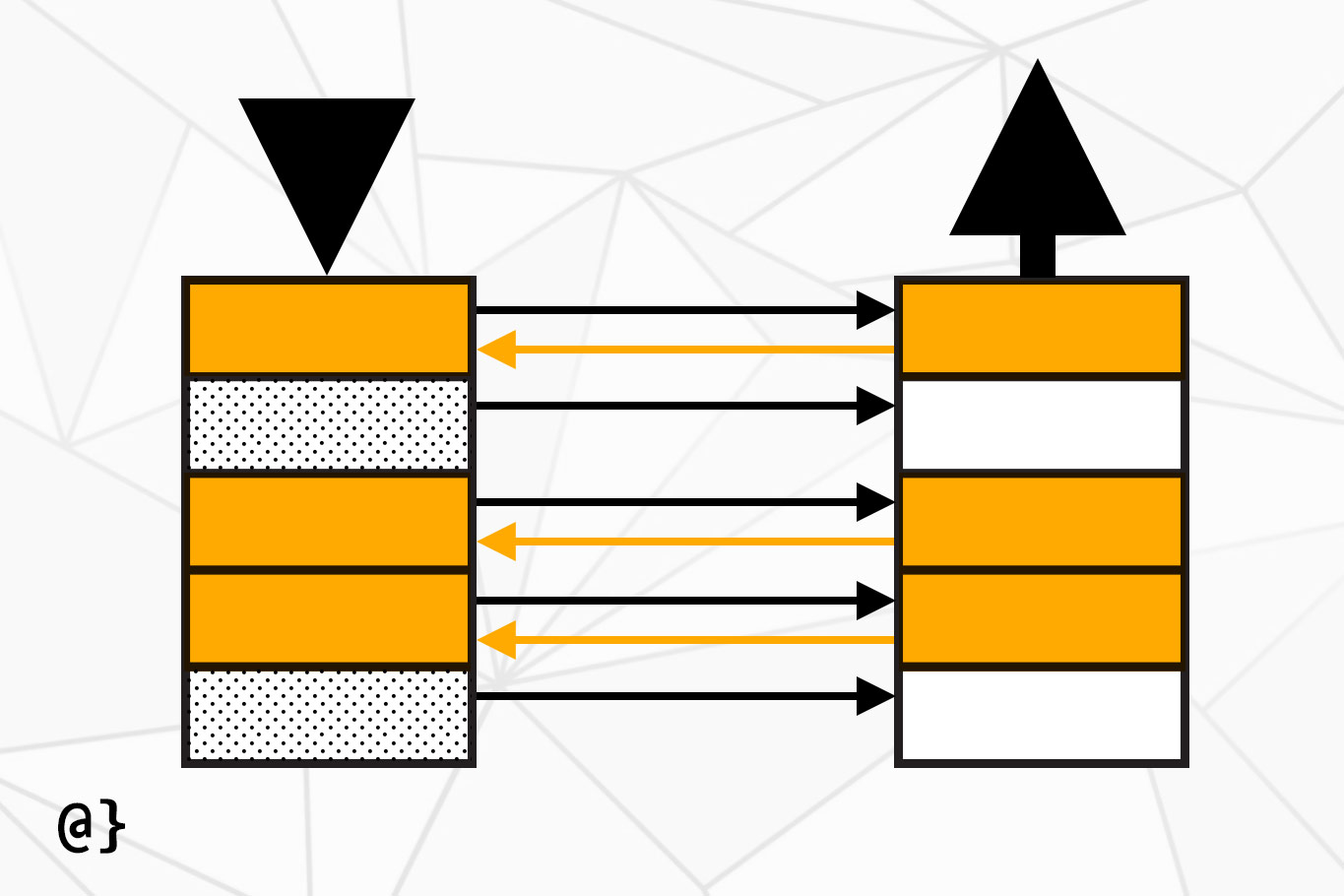

Receive Window

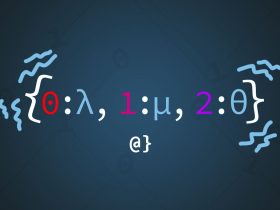

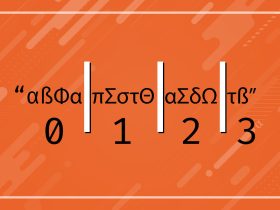

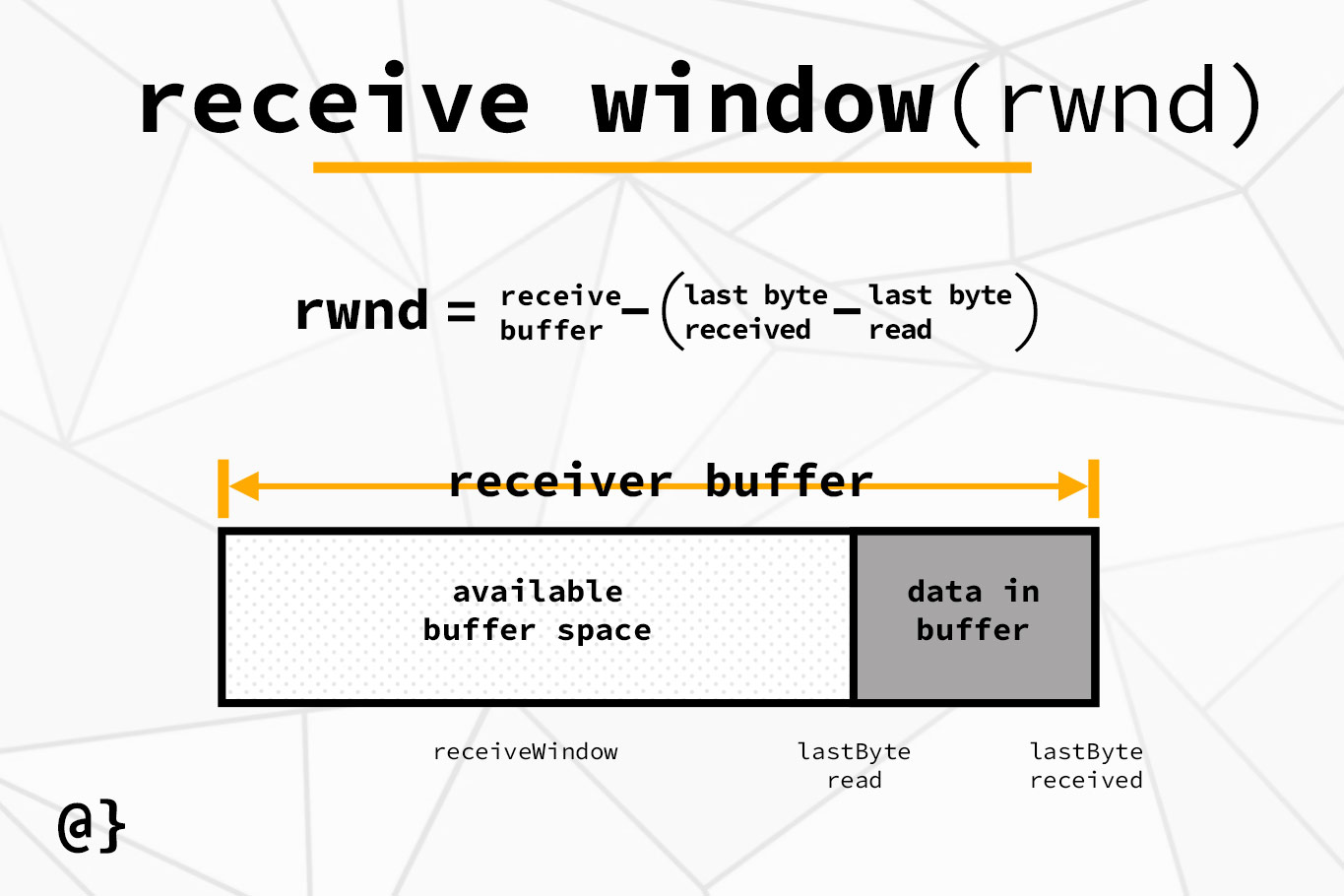

TCP’s flow control is maintained by a variable on the sender side called the receive window (receiveWindow, or rwnd for short). This value tracks how much free space remains in the receiver’s buffer after accounting for data that has arrived but not yet been read. The receiver calculates it as rwnd = receiveBuffer - (lastByteReceived - lastByteRead), as illustrated above.
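The formula rwnd = receiveBuffer - (lastByteReceived - lastByteRead) translates directly into code. This sketch assumes the three quantities are plain byte counts (real TCP tracks 32-bit sequence numbers that wrap around, which this ignores), and `receive_window` is a hypothetical helper name. It also rejects inputs that would violate the buffer’s basic invariants.

```python
def receive_window(receive_buffer, last_byte_received, last_byte_read):
    """rwnd = receiveBuffer - (lastByteReceived - lastByteRead), in bytes."""
    unread = last_byte_received - last_byte_read  # bytes sitting in the buffer
    if unread < 0:
        raise ValueError("cannot read bytes that have not been received")
    if unread > receive_buffer:
        raise ValueError("unread data exceeds the buffer size (overflow)")
    return receive_buffer - unread


print(receive_window(4096, 3000, 1000))  # 2096 bytes of free space
print(receive_window(4096, 4096, 0))     # 0: buffer full, sender must pause
```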

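The receiveWindow variable is continually updated and reported to the sending system via the window field of the TCP header. Keeping the above illustration in mind, a few relationships must always hold: the receiver cannot read data it has not yet received (lastByteRead ≤ lastByteReceived), unread data must fit in the buffer (lastByteReceived - lastByteRead ≤ receiveBuffer), and the sender must keep its unacknowledged data within the advertised window (lastByteSent - lastByteAcked ≤ receiveWindow).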
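On the sender side, the rule is that data sent but not yet acknowledged must fit inside the most recently advertised window: lastByteSent - lastByteAcked ≤ receiveWindow. Here is a minimal sketch of how a sender might compute its remaining allowance, using a hypothetical helper `sendable_bytes` and plain byte counts.

```python
def sendable_bytes(receive_window, last_byte_sent, last_byte_acked):
    """How many more bytes the sender may transmit without exceeding rwnd."""
    in_flight = last_byte_sent - last_byte_acked  # sent but not yet acknowledged
    return max(0, receive_window - in_flight)


print(sendable_bytes(2096, 5000, 4000))  # 1096: 1000 bytes already in flight
print(sendable_bytes(0, 5000, 5000))     # 0: zero window, nothing may be sent
```

Clamping at zero covers the case where the receiver shrinks its advertised window below what is already in flight; the sender simply waits rather than computing a negative allowance.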

When the receive buffer is full, the receiver advertises receiveWindow = 0 and the sender stops transmitting. This creates a problem once the receiver’s application has consumed the buffered data: the receiver only sends segments to the sender when it has data or an acknowledgment to send, so with no new data arriving there is no ACK to carry the updated window back. The sender would be left waiting indefinitely, effectively blocking the transfer.

To get around this, TCP requires the sender to keep sending segments containing a single byte of data while the receive window is zero. These small probes put minimal strain on the network, and each one prompts the receiver to return an ACK carrying its current window, so the sender learns as soon as space frees up!
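The zero-window probing loop can be modeled in a few lines. This is a toy sketch under stated assumptions: the receiver starts with a full buffer, `ZeroWindowReceiver` and `probe_until_open` are hypothetical names, each probe carries one byte that the receiver accepts only if space is free, and the `read_schedule` argument stands in for how much the application reads between probes. Real stacks also space probes out with a persist timer, which this model leaves out.

```python
class ZeroWindowReceiver:
    """Toy receiver whose buffer starts completely full (window = 0)."""

    def __init__(self, buffer_size):
        self.buffer_size = buffer_size
        self.buffered = buffer_size

    def rwnd(self):
        return self.buffer_size - self.buffered

    def on_segment(self, nbytes):
        # Accept only what fits; the ACK advertises the resulting window.
        self.buffered += min(nbytes, self.rwnd())
        return self.rwnd()

    def app_read(self, nbytes):
        # The application drains up to nbytes from the buffer.
        self.buffered -= min(nbytes, self.buffered)


def probe_until_open(receiver, read_schedule):
    """Send 1-byte probes while the window is zero.

    read_schedule[i] is how many bytes the application reads before probe i.
    Returns (probes sent, window advertised in the last ACK).
    """
    probes, rwnd = 0, 0
    for nread in read_schedule:
        receiver.app_read(nread)
        probes += 1
        rwnd = receiver.on_segment(1)  # the probe's ACK reports the current window
        if rwnd > 0:
            break  # window reopened: normal sending can resume
    return probes, rwnd


print(probe_until_open(ZeroWindowReceiver(100), [0, 0, 10]))  # (3, 9)
```

Here the application reads nothing during the first two probes and then frees 10 bytes; the third probe’s byte is accepted and its ACK advertises the 9 bytes still free, which unblocks the sender.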

Review

TCP offers flow control mechanisms to ensure a sender doesn’t deliver data faster than the receiving application can consume it. This mechanism relies on the window field in the TCP header and provides an effective speed-matching system to help support TCP’s reliable data transfer service.