Cache memory is a small, fast memory designed to take advantage of the principle of locality. Locality takes two forms. Temporal locality means that data which has just been accessed is likely to be accessed again soon. Spatial locality means that data at addresses near a recently accessed location is likely to be accessed soon.

Cache memory exploits both forms of locality to give the CPU access to data stored in main memory as efficiently as possible. There are several ways to design a cache toward this goal, each suited to different scenarios, and, as with most architectural decisions, each comes with tradeoffs.
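To make spatial locality concrete, the Python sketch below maps byte addresses to memory block numbers, assuming a hypothetical 16-byte block size (`BLOCK_SIZE` and `block_of` are illustrative names, not from the original). Sixty-four consecutive byte reads, such as a walk over an array, touch only four distinct blocks, so a cache that keeps each block after its first load could serve the other 60 reads without going back to main memory.

```python
# Illustrative sketch: BLOCK_SIZE and block_of are hypothetical names for this example.
BLOCK_SIZE = 16  # assumed bytes per cache block

def block_of(byte_addr: int, block_size: int = BLOCK_SIZE) -> int:
    """Return the number of the memory block that contains byte_addr."""
    return byte_addr // block_size

# Sequential access pattern: 64 consecutive byte addresses.
trace = list(range(64))
blocks_touched = sorted({block_of(a) for a in trace})
print(blocks_touched)  # [0, 1, 2, 3]

# If each block stays cached after its first load, only the first access per block misses.
misses = len(blocks_touched)
hits = len(trace) - misses
print(hits, misses)  # 60 4
```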

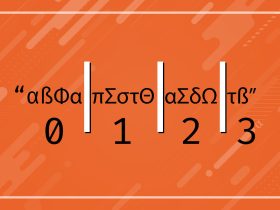

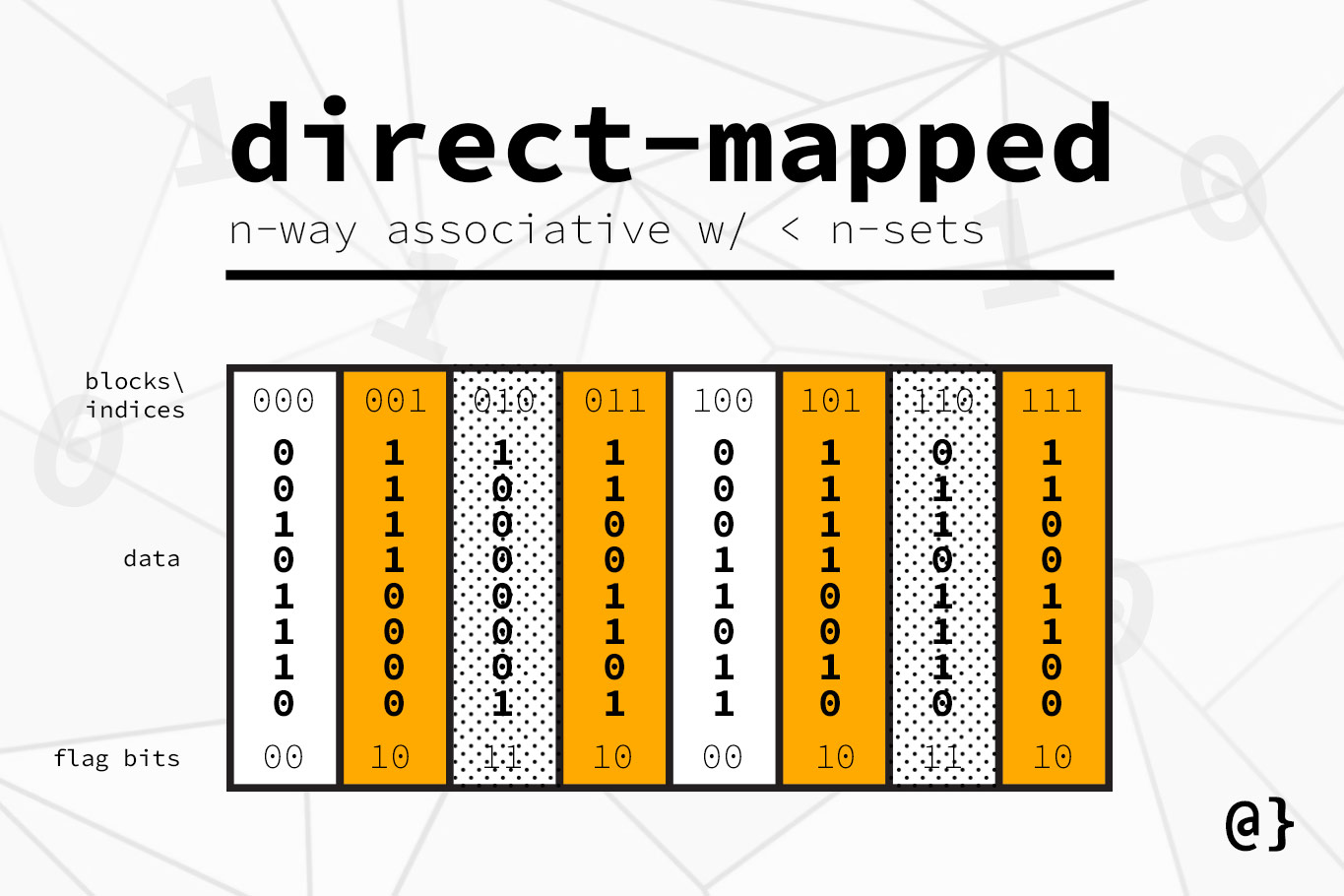

Direct Mapped

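Each block of main memory maps to exactly one block of the cache. The corresponding cache block is calculated as block_addr % cache_block_count, where block_addr is the memory block number (the byte address divided by the block size). The remaining high-order part, the integer quotient block_addr / cache_block_count, is stored as the block's tag so the cache can tell which memory block it currently holds. For example, in a 4-block cache, memory block 5 maps to cache block 1 (counting from 0) because 5 % 4 = 1.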
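The mapping can be sketched in Python as follows. This is an illustrative model, not a hardware description: the function names are hypothetical, addresses are memory block numbers rather than byte addresses, and the cache stores only tags, not data. The trace alternates between blocks 0 and 4, which share cache block 0 in a 4-block cache, so each access evicts the other block and every access misses.

```python
from __future__ import annotations

def direct_mapped_slot(block_addr: int, num_blocks: int) -> tuple[int, int]:
    """Return (cache block index, tag) for a memory block number."""
    return block_addr % num_blocks, block_addr // num_blocks

def simulate_direct_mapped(trace: list[int], num_blocks: int) -> tuple[int, int]:
    """Count (hits, misses) for a trace of memory block numbers (hypothetical helper)."""
    tags: list[int | None] = [None] * num_blocks  # tag held by each cache block; None means empty
    hits = misses = 0
    for block_addr in trace:
        index, tag = direct_mapped_slot(block_addr, num_blocks)
        if tags[index] == tag:
            hits += 1
        else:
            misses += 1
            tags[index] = tag  # only one candidate location, so replace whatever is there
    return hits, misses

print(direct_mapped_slot(5, 4))                        # (1, 1)
print(simulate_direct_mapped([0, 4, 0, 4, 0, 4], 4))   # (0, 6)
```

Because each memory block has exactly one candidate location, a lookup needs only one tag comparison, which is what makes this design simple to build.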

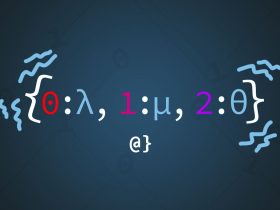

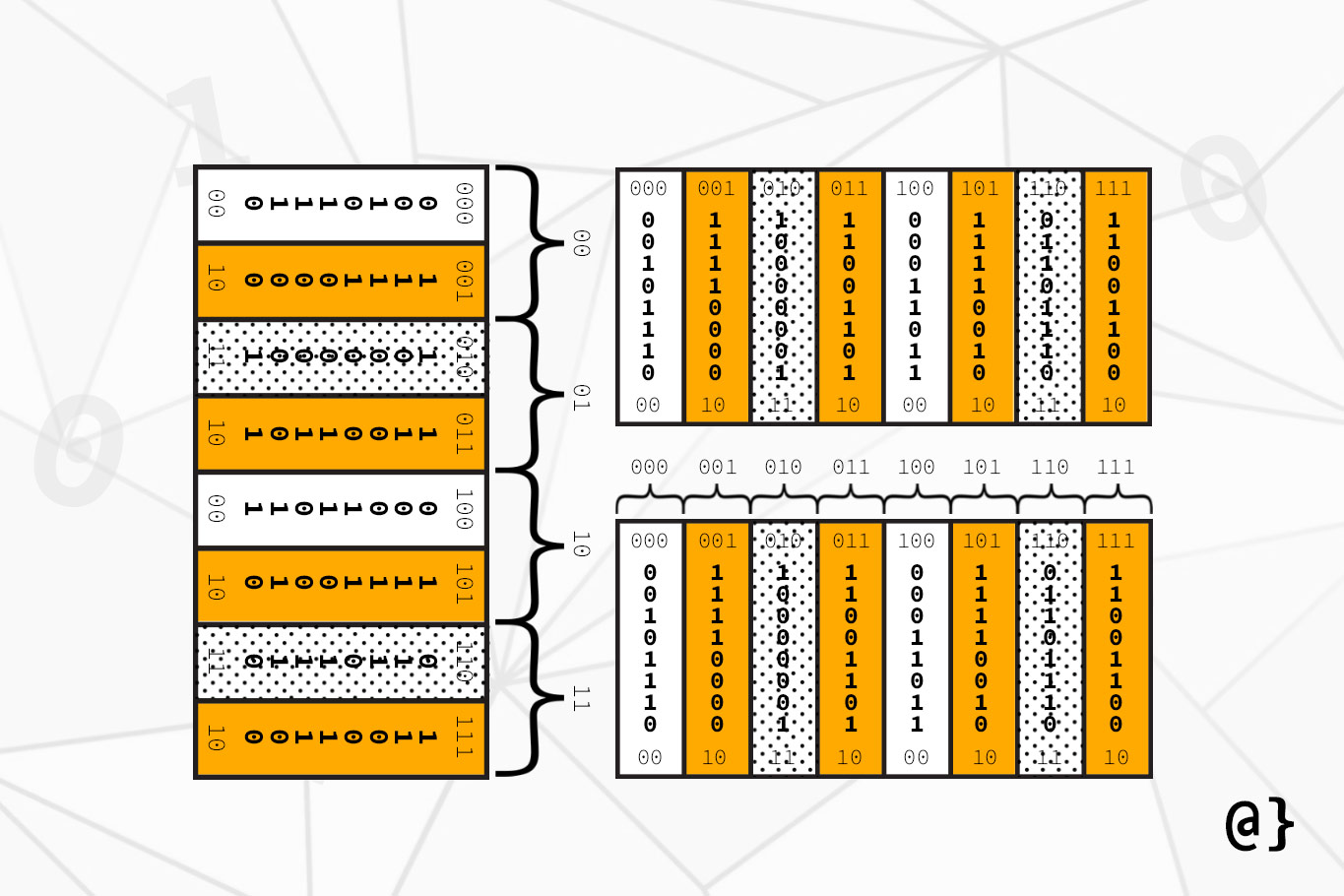

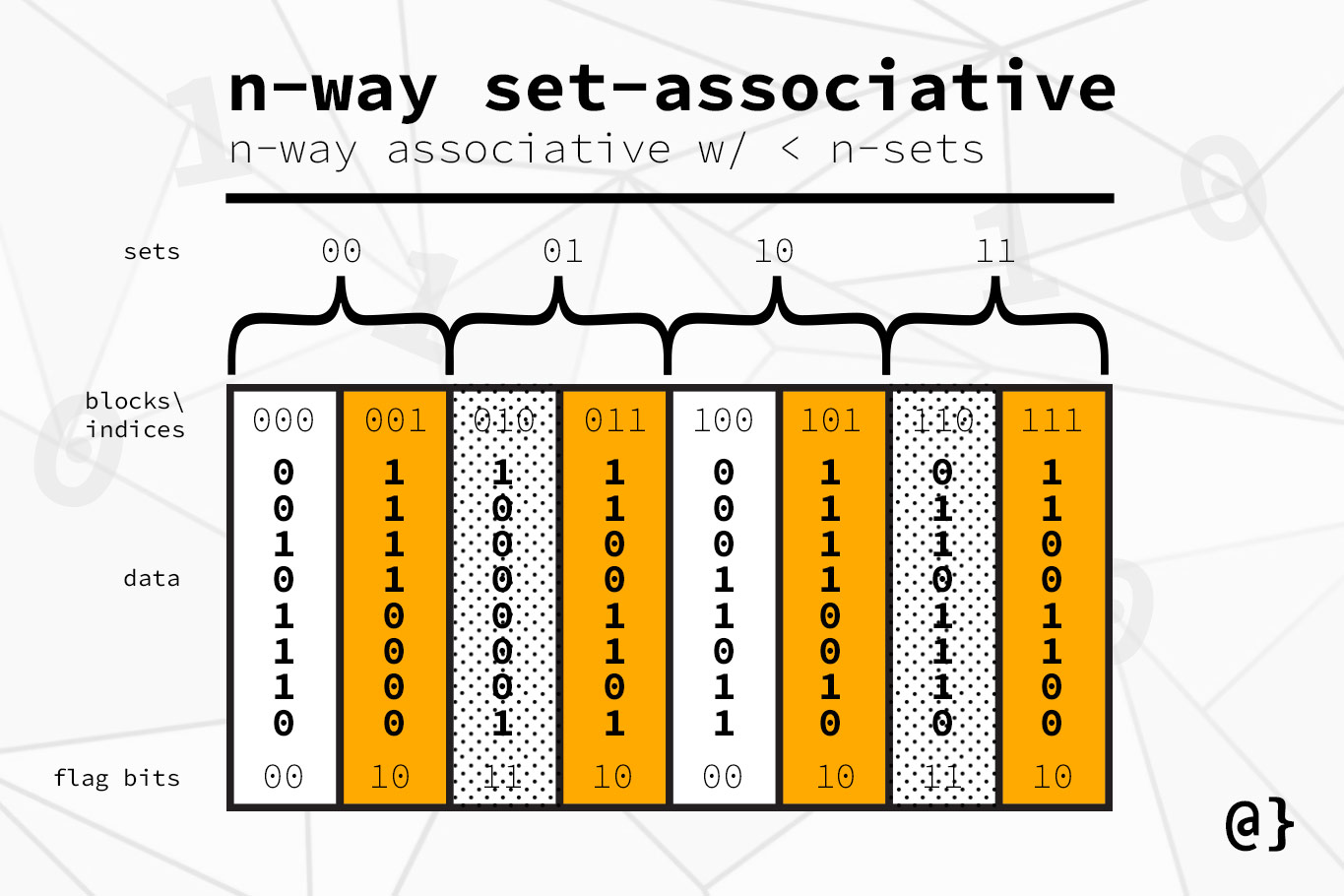

N-Way Set Associative

Set-associative mapping divides the cache blocks into sets of N blocks each. A block from main memory maps to exactly one set but may be placed in any of the N blocks within that set. For example, an 8-block, 2-way set-associative cache is divided into 4 sets of 2 blocks, and each set can hold 2 blocks from main memory at once, distinguished by their tags. The set index is calculated as block_addr % cache_set_count.
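A minimal Python sketch of an N-way set-associative cache follows. It assumes least-recently-used (LRU) replacement within each set, which the text does not specify, and the function names are hypothetical. With 8 blocks and 2 ways there are 4 sets; blocks 0 and 8 both map to set 0 but can coexist in its two ways, so after two initial misses every access hits. In an 8-block direct-mapped cache, the same two blocks would both map to cache block 0 and evict each other on every access.

```python
from __future__ import annotations
from collections import OrderedDict

def set_slot(block_addr: int, num_sets: int) -> tuple[int, int]:
    """Return (set index, tag) for a memory block number."""
    return block_addr % num_sets, block_addr // num_sets

def simulate_set_associative(trace: list[int], num_blocks: int, ways: int) -> tuple[int, int]:
    """Count (hits, misses) for an N-way set-associative cache, assuming LRU replacement."""
    num_sets = num_blocks // ways
    # One OrderedDict per set; its keys are tags, ordered least to most recently used.
    sets = [OrderedDict() for _ in range(num_sets)]
    hits = misses = 0
    for block_addr in trace:
        index, tag = set_slot(block_addr, num_sets)
        s = sets[index]
        if tag in s:
            hits += 1
            s.move_to_end(tag)         # mark as most recently used
        else:
            misses += 1
            if len(s) == ways:
                s.popitem(last=False)  # set is full: evict the least recently used tag
            s[tag] = True
    return hits, misses

print(set_slot(5, 4))  # (1, 1): block 5 goes to set 1 of an 8-block, 2-way cache
print(simulate_set_associative([0, 8, 0, 8, 0, 8], num_blocks=8, ways=2))  # (4, 2)
```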

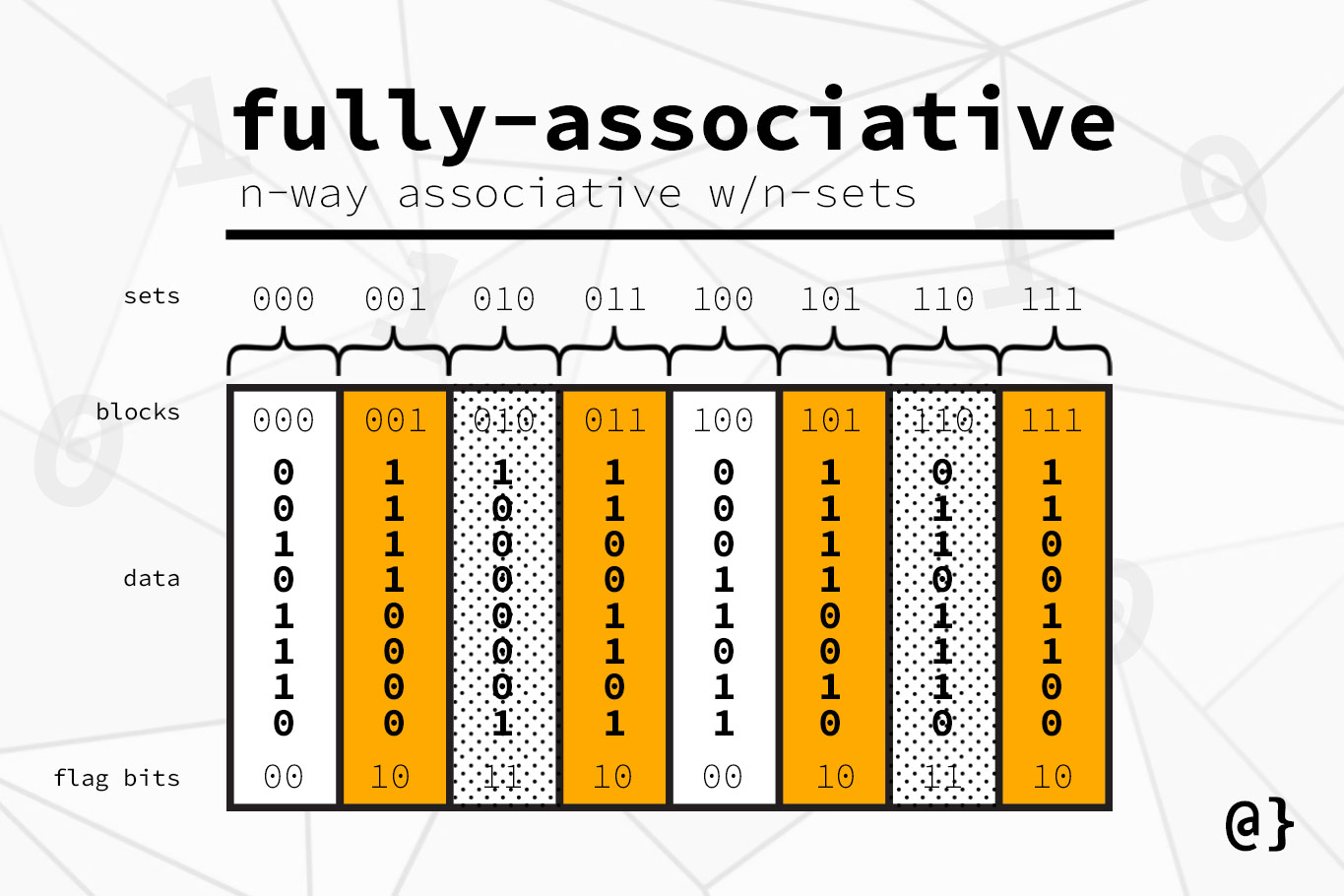

Fully Associative

Fully associative mapping is the limiting case of N-way set-associative mapping in which N equals the number of blocks, so the entire cache forms a single set.

Where 2-way set-associative mapping divides 8 blocks of cache memory into 4 sets of 2 blocks, fully associative mapping treats those same 8 blocks as 1 set of 8 blocks (8-way).

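Effectively, a block from main memory can be placed in any block of the cache. The cost is that a lookup must compare the requested tag against the tag of every block in the cache, which requires one comparator per block and makes large fully associative caches expensive in hardware area and power.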
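The sketch below models a fully associative cache in Python, again assuming LRU replacement and using a hypothetical function name. There is no index: the whole block number serves as the tag. The trace cycles through blocks 0, 8, 16, and 24, which would all collide on cache block 0 of a 4-block direct-mapped cache; here all four fit at once, so only the first pass misses.

```python
from collections import OrderedDict

def simulate_fully_associative(trace, num_blocks):
    """Count (hits, misses) for a fully associative cache, assuming LRU replacement.

    There is no index: the whole block number is the tag, and a block may be
    placed in any of the num_blocks slots.
    """
    cache = OrderedDict()  # tags ordered from least to most recently used
    hits = misses = 0
    for block_addr in trace:
        # In hardware this membership test is a parallel compare against
        # every stored tag, which needs one comparator per cache block.
        if block_addr in cache:
            hits += 1
            cache.move_to_end(block_addr)
        else:
            misses += 1
            if len(cache) == num_blocks:
                cache.popitem(last=False)  # evict the least recently used block
            cache[block_addr] = True
    return hits, misses

trace = [0, 8, 16, 24, 0, 8, 16, 24]
print(simulate_fully_associative(trace, num_blocks=4))  # (4, 4)
```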

Discussion

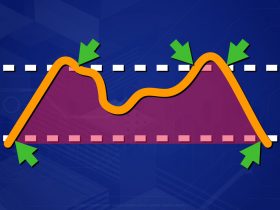

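Cache design involves balancing size against performance. The designs discussed here lie on a single spectrum: a direct-mapped cache is 1-way set associative, a fully associative cache is set associative with a single set, and n-way set-associative caches fall in between. Direct-mapped caches are the simplest to implement, needing only one tag comparison per lookup, but they suffer more conflict misses when several frequently used blocks map to the same cache block, which lowers their hit rate.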
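The hardware side of this tradeoff can be estimated with a small calculation. The Python sketch below assumes byte-addressed memory, 32-bit addresses, power-of-two sizes, and a hypothetical 8-block cache with 16-byte blocks; `cache_geometry` is an illustrative name. It shows that raising associativity shrinks the index field, widens each stored tag, and multiplies the number of tag comparators needed per lookup.

```python
def cache_geometry(num_blocks: int, ways: int, block_size: int, addr_bits: int = 32) -> dict:
    """Return address-field widths and comparator count (power-of-two sizes assumed)."""
    num_sets = num_blocks // ways
    offset_bits = block_size.bit_length() - 1  # log2(block_size): selects a byte within a block
    index_bits = num_sets.bit_length() - 1     # log2(num_sets): selects a set
    tag_bits = addr_bits - index_bits - offset_bits
    return {
        "sets": num_sets,
        "index_bits": index_bits,
        "tag_bits": tag_bits,
        "comparators": ways,  # every way in the selected set is checked in parallel
    }

for label, ways in [("direct-mapped", 1), ("2-way", 2), ("fully associative", 8)]:
    print(label, cache_geometry(num_blocks=8, ways=ways, block_size=16))
# direct-mapped:     8 sets, 3 index bits, 25 tag bits, 1 comparator
# 2-way:             4 sets, 2 index bits, 26 tag bits, 2 comparators
# fully associative: 1 set,  0 index bits, 28 tag bits, 8 comparators
```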

N-way set-associative designs reduce conflict misses by giving each memory block several possible locations, which typically improves hit rates for common workloads. The extra hardware needed to implement them, chiefly additional tag comparators and replacement logic, is often more than justified by these performance gains (R).