Network protocol layering organizes networked computer communication into a hierarchy of services. Each layer offers a set of guaranteed services to the layer above, so higher-level abstractions can be built on assumptions about lower-level transport services.

These guarantees ensure a baseline of interoperability, so higher-layer protocols can take lower-level features for granted. Another benefit is that developers working at one layer can focus on their primary goals rather than wrestling with the functionality of other layers.

Introduction

For example, in TCP/IP communication, the transport layer offers a reliable data transfer service through the Transmission Control Protocol (TCP). This allows developers to focus on the logic of networked applications rather than the delivery of generated data.

Similarly, web browsers can use HTTP, an application-layer protocol, to communicate with web servers, relying on the layers beneath it to deliver data without loss. In other words, the developers of Mozilla Firefox only have to focus on what data is sent between users and websites, not how that data is sent.

Different Layer Models

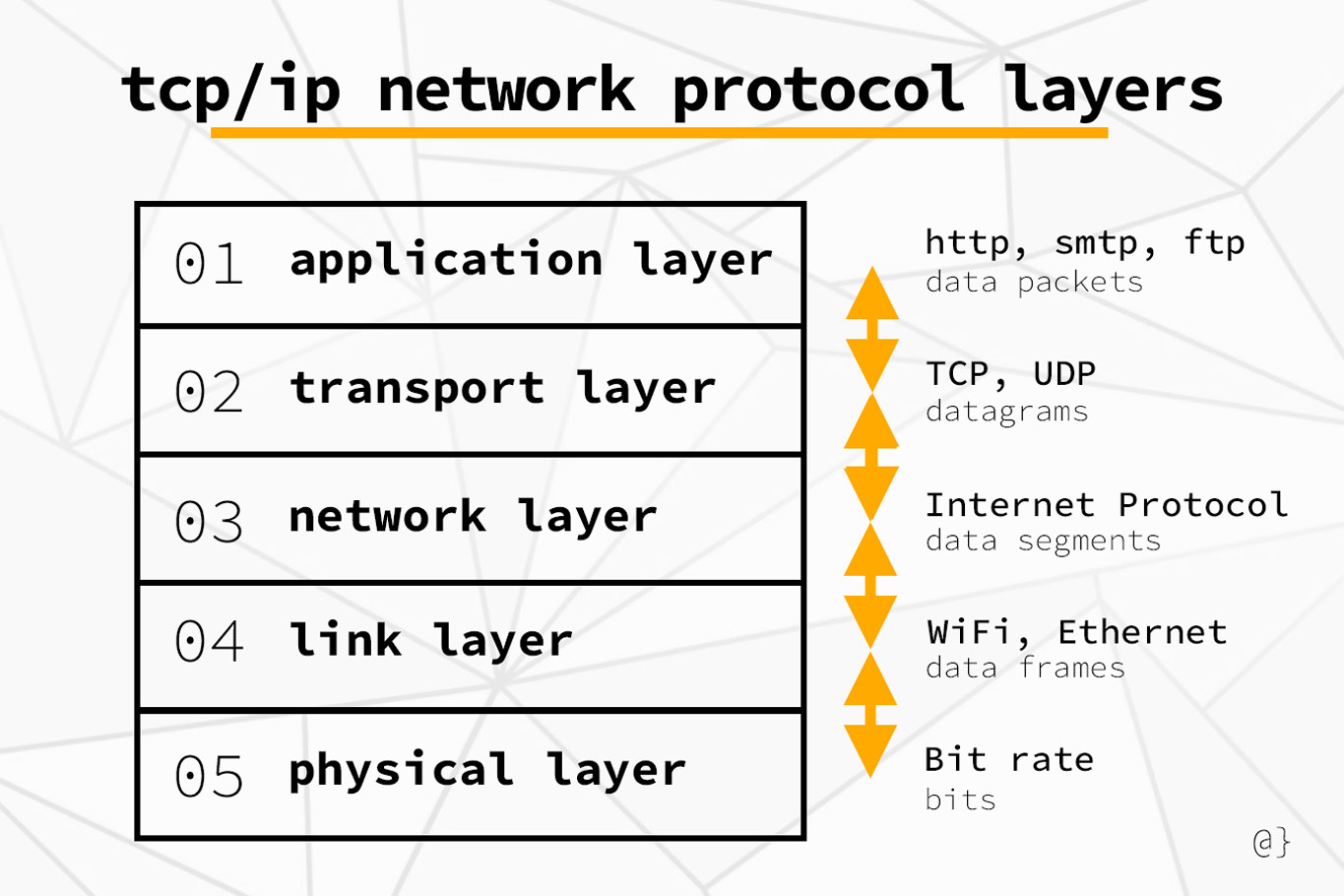

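The two most popular network layer models are the OSI stack and the TCP/IP stack. The two models are very similar, but they differ in ways worth noting: most visibly, the OSI model adds separate Presentation and Session layers between the Application and Transport layers.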

TCP/IP Protocol Stack

- Application

- Transport

- Network

- Link

- Physical

OSI Protocol Stack

- Application

- Presentation

- Session

- Transport

- Network

- Data Link

- Physical

Network Protocol Layers

Each network layer is responsible for maintaining a collection of protocols. These protocols make certain guarantees so that upper layers can focus on more abstract pursuits. For example, the application layer's HTTP uses the transport layer's TCP, which in turn uses the network layer’s IP, all to ensure a website loads when you type its address into your web browser. This layered system spares higher-level applications from having to reinvent the wheel.
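The nesting described above can be sketched as a chain of wrappers, where each layer adds its own header around whatever the layer above handed down. This is a simplified illustration, not a real protocol implementation: the `Packet` class, the helper names, and all header fields and addresses are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass
class Packet:
    """A payload wrapped in one layer's header (all fields are illustrative)."""
    layer: str
    header: dict
    payload: object  # raw bytes, or the Packet handed down by the layer above


def encapsulate(message: bytes) -> Packet:
    """Wrap an application message in transport, network, and link headers."""
    segment = Packet("transport", {"src_port": 49152, "dst_port": 80}, message)
    datagram = Packet("network", {"src_ip": "192.0.2.10", "dst_ip": "93.184.216.34"}, segment)
    frame = Packet("link", {"src_mac": "02:00:00:00:00:01", "dst_mac": "02:00:00:00:00:02"}, datagram)
    return frame


def layers_of(packet) -> list:
    """List the layer names from the outermost wrapper inward."""
    names = []
    while isinstance(packet, Packet):
        names.append(packet.layer)
        packet = packet.payload
    return names


def decapsulate(packet) -> bytes:
    """Strip one header at a time until the original message remains."""
    while isinstance(packet, Packet):
        packet = packet.payload
    return packet
```

Calling `encapsulate` on a message and then `decapsulate` on the result returns the original bytes, which mirrors how the receiving host unwraps a frame layer by layer.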

Application Layer

Application layer protocols provide services to networked applications like web browsers, video streaming services, and email clients. Among the many protocols on this layer, the most widely recognized are as follows:

- HTTP – Used by web browsers

- SMTP – Used by email clients

- FTP – Used for file transfer

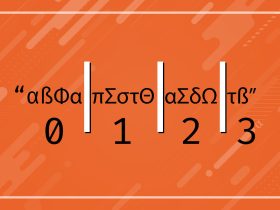

Other services on this layer include the Domain Name System (DNS), which allows users to use human-readable network names such as www.example.com instead of machine-friendly IP address notations such as 93.184.216.34. Application layer protocols are distributed across many end systems and available to a wide range of developers and users. The units of data exchanged at the application layer are commonly referred to as messages.
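To make the idea of an application-layer message concrete, the following minimal sketch builds the text of an HTTP/1.1 GET request. The function name `build_http_get` is hypothetical, and the header set is deliberately small; real browsers send many more headers.

```python
def build_http_get(host: str, path: str = "/") -> bytes:
    """Return the bytes of a minimal HTTP/1.1 GET request."""
    lines = [
        f"GET {path} HTTP/1.1",   # request line: method, target, version
        f"Host: {host}",          # required header in HTTP/1.1
        "Connection: close",      # ask the server to close after responding
        "",                       # blank line marks the end of the headers
        "",
    ]
    return "\r\n".join(lines).encode("ascii")


request = build_http_get("www.example.com")
```

The application only decides what these bytes say. Getting them to the server intact is left to the layers below.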

Transport Layer

The transport layer carries application-layer data between application endpoints, for example between your computer and a website. Two common transport layer protocols are as follows:

- TCP – Used for reliable, connection-oriented data transfer

- UDP – Used for unreliable, connectionless data transfer

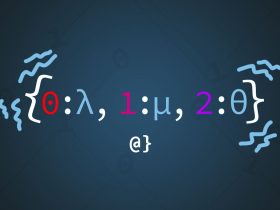

In protocols such as TCP, data can be broken down into smaller segments that may arrive out of order and are then re-ordered appropriately. Protocols like UDP, which are connectionless and focus more on delivery speed, leave ordering and loss recovery to the application. Packets received and transmitted by the transport layer are commonly referred to as segments.
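The re-ordering step can be sketched with byte-offset sequence numbers, which is the general idea behind TCP's sequence numbering. This is a simplified illustration only: it omits acknowledgements, retransmission, and sequence-number wraparound, and the function names are hypothetical.

```python
def split_into_segments(data: bytes, size: int) -> list:
    """Cut data into (sequence_number, chunk) pairs; the number is the byte offset."""
    return [(offset, data[offset:offset + size]) for offset in range(0, len(data), size)]


def reassemble(segments) -> bytes:
    """Rebuild the byte stream from segments received in any order."""
    ordered = sorted(segments, key=lambda seg: seg[0])
    expected = 0
    parts = []
    for seq, chunk in ordered:
        if seq != expected:
            # A gap or overlap means data was lost or duplicated in transit.
            raise ValueError(f"missing or duplicate data at offset {expected}")
        parts.append(chunk)
        expected += len(chunk)
    return b"".join(parts)
```

A receiver that gets the segments in a shuffled order still recovers the original bytes, while a missing segment is detected rather than silently skipped.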

Network Layer

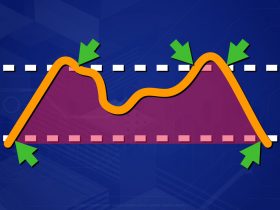

The network layer is less concerned with how data segments are handled and more concerned with the address from which they are sent and the address to which they are to be delivered, for example from host A to host B. The Internet Protocol (IP) resides on the network layer.

The network layer pays attention to things like the source IP address and destination IP address, and it determines the path a packet takes across the network. IP defines how all modern Internet traffic is routed through networks. Packets handled by the network layer are commonly referred to as datagrams.
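One common building block of path selection is longest-prefix matching: a router compares the destination address against its forwarding table and picks the most specific matching entry. The sketch below uses Python's standard `ipaddress` module. The table contents and the `next_hop` function are hypothetical, and real routers build such tables with routing protocols rather than hard-coding them.

```python
import ipaddress

# Hypothetical forwarding table: (destination prefix, next-hop address).
FORWARDING_TABLE = [
    (ipaddress.ip_network("0.0.0.0/0"), "192.0.2.1"),          # default route
    (ipaddress.ip_network("93.184.0.0/16"), "198.51.100.1"),
    (ipaddress.ip_network("93.184.216.0/24"), "203.0.113.1"),
]


def next_hop(destination: str) -> str:
    """Pick the next hop whose prefix matches the destination most specifically."""
    address = ipaddress.ip_address(destination)
    matches = [(net, hop) for net, hop in FORWARDING_TABLE if address in net]
    # The default route matches everything, so `matches` is never empty here.
    best_net, best_hop = max(matches, key=lambda entry: entry[0].prefixlen)
    return best_hop
```

With this table, a datagram addressed to 93.184.216.34 matches all three entries, and the /24 entry wins because it is the most specific.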

Link Layer

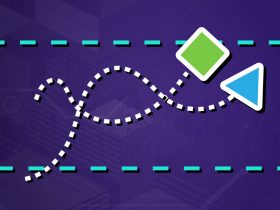

The network layer moves datagrams from host to host. Along the way, a datagram passes through many different routers before reaching an end system. At each node, the network layer passes the datagram down to the link layer for transport across the link to the next node. When the next node receives the datagram, it’s passed back up to the network layer on that node. Common link-layer protocols include:

- WiFi (IEEE 802.11)

- Ethernet (IEEE 802.3)

- Neighbor Discovery Protocol (IPv6)

Computer networks are complex systems in which hierarchy is sometimes difficult to discern. On the path between two networked end systems, a datagram may cross several links that use different link-layer protocols. For example, a datagram may be transmitted over Ethernet from one router to the next and then via WiFi to the final end system. Packets handled by the link layer are referred to as frames.
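The hop-by-hop handoff can be sketched as follows: the same datagram is wrapped in a new frame for every link on the path. This is a simplified model in which the class names, node names, and `forward` function are hypothetical. It also ignores details of real forwarding, such as routers updating the datagram's time-to-live field.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Datagram:
    src_ip: str
    dst_ip: str
    payload: bytes


@dataclass(frozen=True)
class Frame:
    link_type: str   # e.g. "Ethernet" or "WiFi"
    src_node: str
    dst_node: str
    datagram: Datagram


def forward(datagram: Datagram, hops: list) -> list:
    """Wrap the same datagram in a fresh frame for every link on the path.

    `hops` is a list of (sender, receiver, link_type) tuples.
    """
    frames = []
    for sender, receiver, link_type in hops:
        frame = Frame(link_type, sender, receiver, datagram)  # link layer wraps it
        frames.append(frame)
        datagram = frame.datagram  # receiving node unwraps it and hands it up
    return frames
```

The frame changes on every link, but the datagram inside it, including its source and destination IP addresses, stays the same from end to end in this model.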

Physical Layer

Copper wire, fiber optics, radio, and even infrared are all physical media over which data is transferred at the physical layer. Of all the layers, the physical layer varies the most from one network link to the next.

Different protocols govern the interoperability between components largely based on the physical medium through which the data is being transferred. For example, there are different governing protocols for data transmission through copper, fiber, and radio.

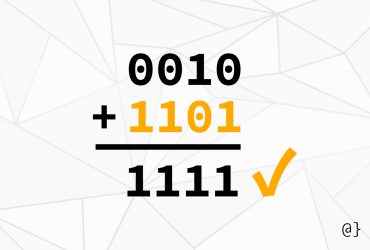

The physical layer is charged with transmitting single bits of data, whereas upper layers transmit entire collections of data: frames, datagrams, segments, and messages. The dynamics of physical layer transmission are more dependent on material physics than developer logic.
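As a rough illustration of the gap between "collections of data" and single bits, the sketch below flattens a sequence of bytes into individual bits and back. The function names are hypothetical, and the most-significant-bit-first order is an assumption made for readability. Actual bit ordering and signal encoding depend on the physical medium and its governing standard.

```python
def to_bits(data: bytes) -> list:
    """Flatten bytes into a list of 0/1 values, most significant bit first."""
    return [(byte >> shift) & 1 for byte in data for shift in range(7, -1, -1)]


def from_bits(bits: list) -> bytes:
    """Regroup bits into bytes, eight at a time."""
    if len(bits) % 8 != 0:
        raise ValueError("bit count must be a multiple of 8")
    out = bytearray()
    for i in range(0, len(bits), 8):
        value = 0
        for bit in bits[i:i + 8]:
            value = (value << 1) | bit  # shift in one bit at a time
        out.append(value)
    return bytes(out)
```

Everything the upper layers produce ultimately crosses a link as a stream like the one `to_bits` returns, and the receiver regroups it into bytes.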

Discussion

Network protocol layering is a powerful abstraction by which developers and engineers can harness existing technology. Rather than re-implementing previous solutions, application developers can rely on predictable behavior defined by established industry standards. As a result, application developers can focus on user interactivity, ISPs on service quality, and hardware manufacturers on new and improved features.