Stop words are the most frequent words in a body of text that, in many cases, can be removed without detracting from the overall message. These words are often removed during natural language processing to improve search and other analytical efficiencies.

There is no universal list of stop words and contextual relevancy should never be disregarded. That’s to say—the most common words in a medical text are likely far different from the most common terms in sports literature.

Building a domain-specific stop word list can prove beneficial in nearly every NLP application. Functionally, it’s useful to have a core collection of stop words to start with.

What are Stop Words

Stop words are words so common in a language that removing them doesn’t change the overall message enough to lose meaning. As such, Natural Language Processing (NLP) programs often disregard these terms as a means to improve contextual relevancy and performance. After all, fewer words to process means less processing time.

This concept has become so popular, and useful, that every NLP pipeline I’ve ever worked with has some form of stop word filtering. One of the most common use cases is search engines.

By removing frivolous terms, search queries can be run much faster and with greater relevancy. For example, searching for “what are stop words” is pretty similar to searching for “stop words.”

Google thinks they’re so similar that they return the same Wikipedia and Stanford.edu articles for both terms. The application is clear enough, but the question of which words to remove arises.
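To make the search example concrete, here is a minimal sketch of how two queries can collapse to the same terms once stop words are dropped. The `QUERY_STOP_WORDS` set and the `strip_stop_words` helper are hypothetical names made up for illustration, and this is not a description of how Google actually handles queries.

```python
# Illustrative stop words only; a real search engine would use a fuller list.
QUERY_STOP_WORDS = {"what", "are", "is", "the", "a", "an", "of"}


def strip_stop_words(query, stop_words=QUERY_STOP_WORDS):
    """Lowercase a query, split it on whitespace, and drop stop words."""
    return [term for term in query.lower().split() if term not in stop_words]


print(strip_stop_words("what are stop words"))  # ['stop', 'words']
print(strip_stop_words("stop words"))           # ['stop', 'words']
```

Both queries reduce to the same two terms, which is one plausible reason they would surface the same results.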

Stop Word Lists

Not all stop word lists are created equal. Lists that are effective in one topical domain can be ineffective (or even irrelevant) in others. However, even vastly different topical domains still share many of the same words.

Words like the, of, to, in, a, and that are all common connective tissue within the English language, irrespective of topical domain. That’s to say—authors are likely to use these words regardless of what is being discussed.

With that in mind, one can appreciate how a common stop words list can offer utility. A list of common words can serve well as a base to build more encompassing domain-specific filters. Let’s consider two word-frequency distributions from two very different sources:

1. Scientific Journal Article (biology)

The article Efflux pump inhibitors for bacterial pathogens: From bench to bedside was published in the February 2019 issue of the Indian Journal of Medical Research (R).

It contains phrases like “the characterization of many efflux pumps in Gram-positive bacteria,” “MC-207,110 [phenylalanyl arginyl β-naphthylamide (PAβN)], a peptidomimetic EPI, was the first to be discovered in 2001,” and “pyridopyrimidine analogues D2 and D13-9001 have been reported as MexAB-OprM-specific pump inhibitor in MexAB-overexpressing P. aeruginosa under both in vitro and in vivo conditions.”

Clearly, a highly specialized domain of discussion. Check out the frequency distribution of words:

# Top 10 most frequent words in Biology-domain text

('the', 315)

('of', 271)

('and', 162)

('to', 136)

('in', 105)

('a', 98)

('efflux', 86)

('s.', 78)

('that', 68)

('as', 65)

2. RFC for TCP Protocol (networking)

RFC 793, the Request for Comments that specifies the Transmission Control Protocol (TCP), was made public in September 1981 (R). It serves as the foundation for the most widely used transport-layer protocol on the modern web, and it has been revised many times over the years.

This document contains phrases like “The sequence number of the first data octet in this segment (except when SYN is present). If SYN is present the sequence number is the initial sequence number (ISN) and the first data octet is ISN+1,” and “Among the variables stored in the TCB are the local and remote socket numbers, the security and precedence of the connection, pointers to the user’s send and receive buffers, pointers to the retransmit queue and to the current segment.”

Again—clearly a unique domain of discussion. Check out the frequency distribution of words from this text:

# Top 10 most frequent words in Networking-domain text

('the', 1323)

('to', 460)

('a', 451)

('is', 415)

('of', 409)

('and', 332)

('in', 295)

('tcp', 219)

('be', 194)

('sequence', 162)

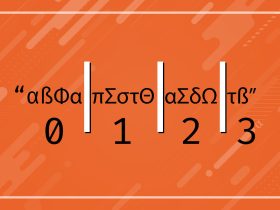

Note: Both of the above examples were minimally processed by normalizing whitespace, lowercasing text, and truncating to equal lengths.
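For readers who want to reproduce something similar, the sketch below shows one way the counts could be generated using only the standard library. It assumes the simplest possible tokenization (lowercase, then split on whitespace); the `word_frequencies` function name is my own and the exact script behind the numbers above isn't shown in this article.

```python
from collections import Counter


def word_frequencies(text, top_n=10):
    """Return the top_n most frequent whitespace-delimited tokens in text."""
    # str.split() with no arguments also collapses runs of whitespace,
    # which covers the whitespace normalization step.
    tokens = text.lower().split()
    return Counter(tokens).most_common(top_n)


sample = "The sequence number of the first data octet in this segment"
print(word_frequencies(sample, top_n=1))  # [('the', 2)]
```

Because this splits on whitespace only, punctuation stays attached to tokens, which is presumably why entries such as 's.' show up in the biology list.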

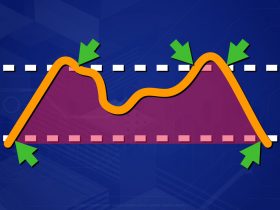

The Use of Stop Words

The examples above illustrate how even vastly different text domains can have remarkably similar common terms. Of the 20 terms (10 from each source), only three stand out as clearly domain-specific: tcp, sequence, and efflux. A fourth, s., is most likely a fragment of the species name S. aureus rather than a common word.

For applications of Natural Language Processing (NLP), it is almost always useful to take some measure to filter out stop words. Not only does this decrease processing time (fewer words), but it also helps to make domain-specific differences more apparent.

Let’s consider the above examples again, this time with stop words being filtered before frequency analysis.

1. Scientific Journal Article with Stop Words Removed

# Top 10 most frequent terms after removal of stop words

('efflux', 86)

('s.', 78)

('aureus', 65)

('nora', 57)

('epis', 42)

('antibiotics', 40)

('pumps', 40)

('ciprofloxacin', 37)

('pump', 32)

('norfloxacin,', 29)

2. RFC for TCP Protocol with Stop Words Removed

# Top 10 most frequent terms after removal of stop words

('tcp', 219)

('sequence', 162)

('data', 151)

('connection', 143)

('segment', 141)

('control', 130)

('number', 94)

('1981transmission', 87)

('user', 83)

('send', 79)

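With stop words removed, the two top-10 lists no longer share a single term. These frequency distributions now speak to the domain-specific language used in each document rather than to the connective tissue of English. Leftover tokens such as 1981transmission and norfloxacin, (with its trailing comma) are artifacts of the minimal preprocessing noted earlier.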
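The filtering step itself is small. Below is a minimal sketch, assuming whitespace tokenization and a deliberately tiny stop list; `BASE_STOP_WORDS` and `top_terms` are hypothetical names for illustration, and a fuller list would be swapped in for real work.

```python
from collections import Counter

# A small illustrative stop list; swap in a fuller one for real use.
BASE_STOP_WORDS = {"the", "of", "and", "to", "in", "a", "is", "that", "as", "be"}


def top_terms(text, stop_words=BASE_STOP_WORDS, top_n=10):
    """Count tokens after dropping stop words and return the top_n terms."""
    tokens = text.lower().split()
    kept = [token for token in tokens if token not in stop_words]
    return Counter(kept).most_common(top_n)


sample = (
    "The security and precedence of the connection "
    "and the state of the connection"
)
print(top_terms(sample, top_n=1))  # [('connection', 2)]
```

Without the filter, the top term in that sample would be "the"; with it, the domain term rises to the top.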

Stop Word Lists

Modern NLP pipelines often include fairly involved preprocessing stages, frequently with domain-specific considerations. One shared aspect of most pipelines I’ve developed or worked with is the application of stop word filtering at some point. Stemming and punctuation removal can be extremely useful as well.

All things considered, I still place basic stop word filtering among the most beneficial preprocessing steps. I recommend anyone serious about applied NLP develop personalized stop word lists catered to the domain in which work is being done. However, there are some popular stop word lists that can help get you started.
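One possible starting point for a personalized list is to look for terms that show up in nearly every document of a domain corpus and treat them as candidates for review. The sketch below uses a simple document-frequency heuristic; it is an assumption on my part rather than a standard recipe, and `domain_stop_candidates` and `min_doc_fraction` are made-up names.

```python
from collections import Counter


def domain_stop_candidates(documents, base_stop_words, min_doc_fraction=0.8):
    """Suggest extra stop words: terms found in most documents of a corpus."""
    doc_counts = Counter()
    for doc in documents:
        # Count each term once per document, however often it repeats.
        doc_counts.update(set(doc.lower().split()))
    threshold = min_doc_fraction * len(documents)
    return {
        term
        for term, count in doc_counts.items()
        if count >= threshold and term not in base_stop_words
    }


docs = [
    "the patient received treatment",
    "the patient was discharged",
    "a patient reported pain",
]
print(domain_stop_candidates(docs, base_stop_words={"the", "a", "was"}))
# {'patient'}
```

The output is only a list of candidates. A term like "patient" may carry no signal in a clinical corpus, but that call should still be made by someone who knows the domain.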

NLTK Stop Words

Python’s Natural Language Toolkit (NLTK) is arguably the most popular natural language processing library. It features lots of corpora (NLP reference texts) and easy APIs for common NLP tasks such as tokenizing, stemming, tagging, and classifying a wide range of text in a wide range of languages. Among the many useful NLTK features is the list of common stop words provided:

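import nltk

from nltk.corpus import stopwords

# Download the stop word corpus on first use (skipped if already present)

nltk.download('stopwords')

# Load language-specific stop words

stopwords.words('english')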

['i', 'me', 'my', 'myself', 'we', 'our', 'ours', 'ourselves', 'you', 'your', 'yours', 'yourself', 'yourselves', 'he', 'him', 'his', 'himself', 'she', 'her', 'hers', 'herself', 'it', 'its', 'itself', 'they', 'them', 'their', 'theirs', 'themselves', 'what', 'which', 'who', 'whom', 'this', 'that', 'these', 'those', 'am', 'is', 'are', 'was', 'were', 'be', 'been', 'being', 'have', 'has', 'had', 'having', 'do', 'does', 'did', 'doing', 'a', 'an', 'the', 'and', 'but', 'if', 'or', 'because', 'as', 'until', 'while', 'of', 'at', 'by', 'for', 'with', 'about', 'against', 'between', 'into', 'through', 'during', 'before', 'after', 'above', 'below', 'to', 'from', 'up', 'down', 'in', 'out', 'on', 'off', 'over', 'under', 'again', 'further', 'then', 'once', 'here', 'there', 'when', 'where', 'why', 'how', 'all', 'any', 'both', 'each', 'few', 'more', 'most', 'other', 'some', 'such', 'no', 'nor', 'not', 'only', 'own', 'same', 'so', 'than', 'too', 'very', 's', 't', 'can', 'will', 'just', 'don', 'should', 'now']

PostgreSQL Stop Words

PostgreSQL is one of the most popular open-source database systems. It’s been around for more than 30 years, is supported by almost every major cloud provider, language framework, and programming language, and offers enterprise-grade relational operations (R).

Like most database systems, PostgreSQL is commonly used for textual searches. Users often include common words like and, that, the, a, with, and other similar stop words in their searches.

By excluding these terms, database queries can quickly become more performant. As one might imagine, PostgreSQL comes with its own handy list of internal stop words for just such an application:

# Postgres Stop Words

# C:\Program Files\PostgreSQL\{VERSION}\share\tsearch_data\english.stop

['i', 'me', 'my', 'myself', 'we', 'our', 'ours', 'ourselves', 'you', 'your', 'yours', 'yourself', 'yourselves', 'he', 'him', 'his', 'himself', 'she', 'her', 'hers', 'herself', 'it', 'its', 'itself', 'they', 'them', 'their', 'theirs', 'themselves', 'what', 'which', 'who', 'whom', 'this', 'that', 'these', 'those', 'am', 'is', 'are', 'was', 'were', 'be', 'been', 'being', 'have', 'has', 'had', 'having', 'do', 'does', 'did', 'doing', 'a', 'an', 'the', 'and', 'but', 'if', 'or', 'because', 'as', 'until', 'while', 'of', 'at', 'by', 'for', 'with', 'about', 'against', 'between', 'into', 'through', 'during', 'before', 'after', 'above', 'below', 'to', 'from', 'up', 'down', 'in', 'out', 'on', 'off', 'over', 'under', 'again', 'further', 'then', 'once', 'here', 'there', 'when', 'where', 'why', 'how', 'all', 'any', 'both', 'each', 'few', 'more', 'most', 'other', 'some', 'such', 'no', 'nor', 'not', 'only', 'own', 'same', 'so', 'than', 'too', 'very', 's', 't', 'can', 'will', 'just', 'don', 'should', 'now']

You’d have to be quite bored to notice, but these two lists contain exactly the same words. I didn’t realize they were identical when I started writing. I wasn’t going to include redundant lists, but I feel that two gigantic systems relying on such a simple list does well to illustrate the power of basic stop word filtering.
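PostgreSQL stop word files are plain text with one word per line, so they are easy to reuse outside the database. Here is a minimal sketch of a loader; `load_stop_file` is a hypothetical helper, and the demo writes a tiny temporary file rather than assuming where PostgreSQL is installed on your machine.

```python
import tempfile
from pathlib import Path


def load_stop_file(path):
    """Read a stop word file with one word per line into a set."""
    lines = Path(path).read_text(encoding="utf-8").splitlines()
    # Skip blank lines, trim stray whitespace, and fold to lowercase.
    return {line.strip().lower() for line in lines if line.strip()}


# Demo with a throwaway file standing in for english.stop.
with tempfile.TemporaryDirectory() as tmp_dir:
    demo_path = Path(tmp_dir) / "demo.stop"
    demo_path.write_text("i\nme\nmy\n", encoding="utf-8")
    print(sorted(load_stop_file(demo_path)))  # ['i', 'me', 'my']
```

Pointing the same function at the english.stop path shown above should produce a set that can be passed to any of the filtering code in a Python pipeline.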

SpaCy Stop Words

SpaCy is billed as the “Industrial Strength Natural Language Processing” library. It also happens to be my favorite NLP library, and one of my favorite Python libraries, period. It’s built from the ground up for performance, has exasperatingly sensible object models, and churns through 1,000,000 words before most other libraries can even load their language models.

SpaCy is no exception to the use of stop words.

from spacy.lang.en.stop_words import STOP_WORDS

# The following list was generated from the SpaCy stop words file.

# It contains a slightly more dynamic approach than simple word lists.

['may', 'sometime', 'next', '‘ve', 'various', 'about', 'toward', 'others', 'of', 'behind', 'be', 'keep', 'less', 'amongst', 'beforehand', 'in', 'whoever', 'some', 'seemed', 'throughout', 'been', 'are', 'side', 'top', 'myself', 'anything', 'which', 'if', 'formerly', 'above', 'due', 'together', 'after', 'himself', 'last', "'re", 'how', 'out', 'please', 'being', 'on', 'five', 'call', 'anyhow', 'former', 'his', 'few', 'i', "'m", 'without', 'he', 'unless', 'ours', 'whether', 'six', 'almost', 'already', 'within', 'doing', '’ve', "n't", 'is', 'neither', 'during', 'will', 'yours', 'rather', 'all', 'have', 'whereupon', 'had', 'although', 'front', 'go', 'nine', 'n‘t', 'always', 'somehow', 'should', 'just', 'n’t', 'noone', 'whole', 'your', 'none', 'again', 'move', 'fifty', '’ll', 'cannot', 'a', 'did', 'when', 'beyond', 'except', 'nowhere', 'done', 'until', 'themselves', 'through', 'besides', 'ca', 'down', 'whereafter', 'its', 'since', 'take', "'ve", 'give', 'around', 'him', 'twelve', 'anyone', 'very', 'by', 'wherein', 'up', 'else', 'once', 'yourself', 'even', 'seem', 'whose', 'ten', 'latterly', 'we', 'not', 'name', 'forty', 'beside', 'her', '‘s', 'seeming', 'who', 'third', 'there', 'per', 'herein', 'nor', '’m', 'indeed', 'every', 'hereafter', 'part', 'three', 'thereafter', 'these', 'used', 'mine', 'does', 'mostly', 'more', 'so', 'me', 'could', 'it', 'our', 'everything', 'off', 'hundred', 'least', 'ourselves', 'see', 'and', 'same', 'becomes', 'twenty', 'upon', 'put', 'wherever', 'enough', 'own', 'across', 'most', 'under', 'though', 'well', 'becoming', 'below', 'anywhere', 'namely', 'everyone', 'over', 'otherwise', 'seems', '‘m', 'any', 'my', 'where', 'were', 'serious', 'therefore', 'to', '‘d', 'against', 'was', 'whatever', 'sometimes', '’s', 'but', 'either', 'hereby', 'full', 'do', 'become', "'s", 'perhaps', 'hers', '’d', 'however', 'meanwhile', 'that', 'four', 'hereupon', 'many', 'whence', 'might', 'into', 'amount', 'as', 'along', 'then', 'them', 'or', 'hence', 'only', 'for', 'she', 'anyway', 'whither', "'ll", 'elsewhere', 'also', 'both', 'can', 'show', 'quite', 'alone', 'here', 'too', 'empty', 'those', 'much', "'d", 'they', 'sixty', 'regarding', 'two', 'became', 'towards', 'thus', 'bottom', 'between', 'nothing', 'back', 'other', 'fifteen', '’re', 'eight', 'another', 'such', 'thru', 'among', 'has', 'before', 'get', 'why', 'ever', 'nevertheless', 'eleven', 'make', 'latter', 'thereby', 'yet', 'onto', 'us', 'something', 'what', 'often', 'itself', 'because', 'further', 'several', 'an', 'really', 'first', 'their', 'you', 'now', 'everywhere', 'someone', 'one', '‘re', 'at', 'using', 'thence', 'say', 'somewhere', 'nobody', 'thereupon', 'from', 'never', 'whenever', 'am', 're', 'whereas', 'would', 'afterwards', 'via', 'whereby', 'than', 'made', 'no', 'yourselves', 'herself', 'each', 'therein', '‘ll', 'moreover', 'whom', 'the', 'while', 'with', 'this', 'must', 'still']

SpaCy’s STOP_WORDS list is 199 words longer than the NLTK list. This is accounted for by words like although, meanwhile, and whereas. While these words might seem comparatively complex next to words like as, I, we, and us, none really offers much contextual benefit.
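Comparing two stop word lists is a natural fit for set operations. The sketch below uses tiny hand-picked excerpts in place of the full lists so that it runs without either library installed; `compare_stop_lists` and both sample lists are illustrative assumptions, not the real NLTK or SpaCy data.

```python
def compare_stop_lists(first, second):
    """Return the shared and unique entries of two stop word lists."""
    first_set, second_set = set(first), set(second)
    return {
        "shared": first_set & second_set,
        "only_first": first_set - second_set,
        "only_second": second_set - first_set,
    }


# Tiny excerpts for illustration only, not the complete lists.
shorter_sample = ["as", "i", "we", "further"]
longer_sample = ["as", "i", "we", "us", "further", "although", "meanwhile", "whereas"]

result = compare_stop_lists(shorter_sample, longer_sample)
print(sorted(result["only_second"]))  # ['although', 'meanwhile', 'us', 'whereas']
print(len(result["only_first"]))      # 0
```

Passing the full lists instead would show exactly which entries one list has and the other lacks, and comparing the two sets for equality is a quick way to check whether two lists match regardless of order.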

Final Thoughts

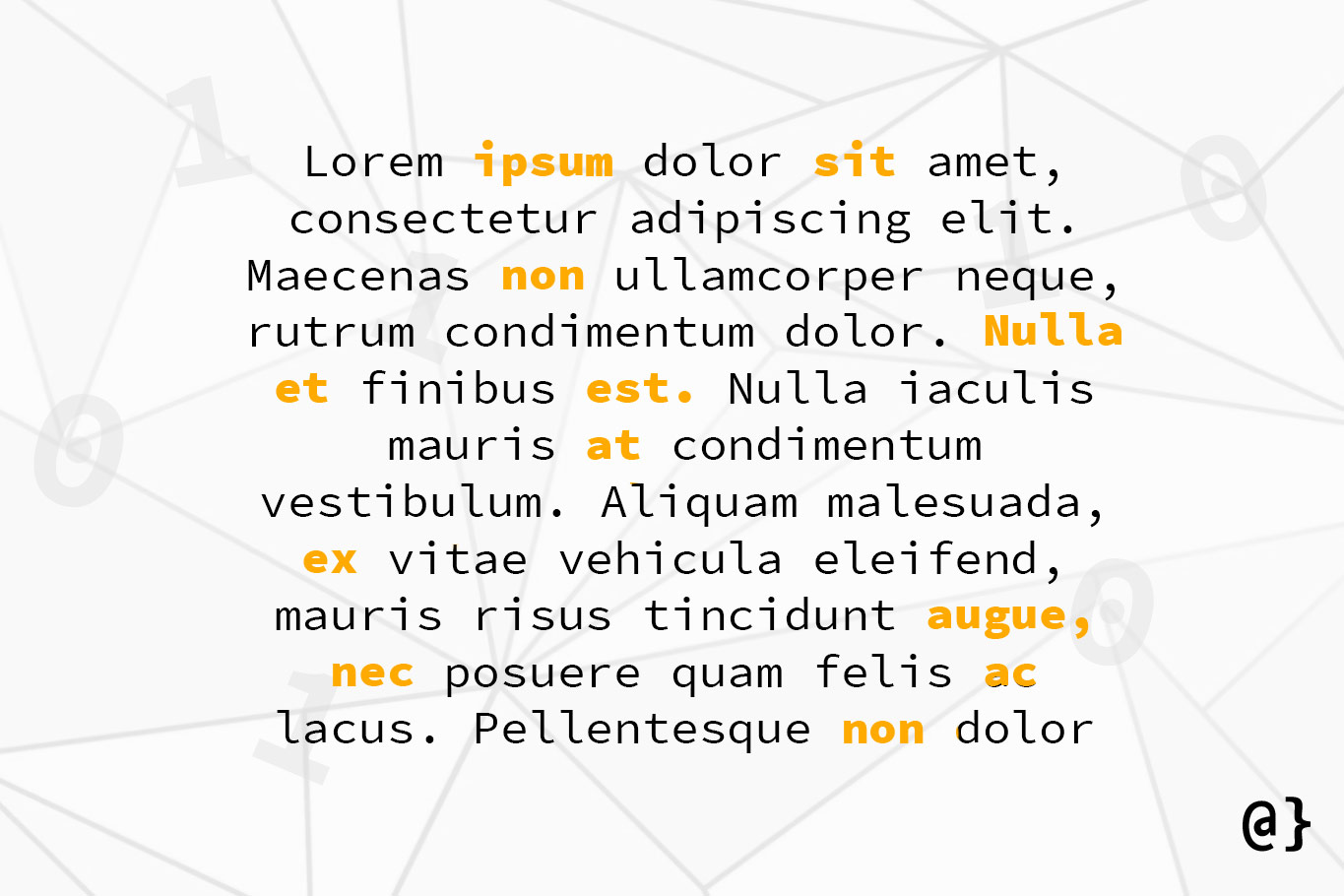

Stop words natural language processing efficiency textual domains. That sentence has zero stop words and still conveys some sense of what this article was about. Stop words are like the connective tissue of a language: they hold things together, but even in their absence one can generally still discern what’s going on.

In the application of Natural Language Processing, the filtering of stop words often equates to decreased processing times and increased relevancy. I’d encourage everyone working on NLP projects to take time to carefully develop domain-specific stop words and continually adjust as needed.